Technical Details

Michael Dumelle, Matt Higham, and Jay M. Ver Hoef

Introduction

This vignette covers technical details regarding the functions in spmodel that perform computations. We first provide a notation guide and then describe relevant details for each function.

If you use spmodel in a formal publication or report,

please cite it. Citing spmodel lets us devote more

resources to it in the future. To view the spmodel

citation, run

citation(package = "spmodel")

#> To cite spmodel in publications use:

#>

#> Dumelle M, Higham M, Ver Hoef JM (2023). spmodel: Spatial statistical

#> modeling and prediction in R. PLOS ONE 18(3): e0282524.

#> https://doi.org/10.1371/journal.pone.0282524

#>

#> A BibTeX entry for LaTeX users is

#>

#> @Article{,

#> title = {{spmodel}: Spatial statistical modeling and prediction in {R}},

#> author = {Michael Dumelle and Matt Higham and Jay M. {Ver Hoef}},

#> journal = {PLOS ONE},

#> year = {2023},

#> volume = {18},

#> number = {3},

#> pages = {1--32},

#> doi = {10.1371/journal.pone.0282524},

#> url = {https://doi.org/10.1371/journal.pone.0282524},

#> }

Notation Guide

\[\begin{equation*}
\begin{split}
n & = \text{Sample size} \\
\mathbf{y} & = \text{Response vector} \\
\boldsymbol{\beta} & = \text{Fixed effect parameter vector} \\
\mathbf{X} & = \text{Design matrix of known explanatory variables (covariates)} \\
p & = \text{The number of linearly independent columns in } \mathbf{X} \\
\boldsymbol{\mu} & = \text{Mean vector} \\
\mathbf{w} & = \text{Latent generalized linear model mean on the link scale} \\
\varphi & = \text{Dispersion parameter} \\
\mathbf{Z} & = \text{Design matrix of known random effect variables} \\
\boldsymbol{\theta} & = \text{Covariance parameter vector} \\
\boldsymbol{\Sigma} & = \text{Covariance matrix evaluated at } \boldsymbol{\theta} \\
\boldsymbol{\Sigma}^{-1} & = \text{The inverse of } \boldsymbol{\Sigma} \\
\boldsymbol{\Sigma}^{1/2} & = \text{The square root of } \boldsymbol{\Sigma} \\
\boldsymbol{\Sigma}^{-1/2} & = \text{The inverse of } \boldsymbol{\Sigma}^{1/2} \\
\boldsymbol{\Theta} & = \text{General parameter vector} \\
\ell(\boldsymbol{\Theta}) & = \text{Log-likelihood evaluated at } \boldsymbol{\Theta} \\
\boldsymbol{\tau} & = \text{Spatial (dependent) random error} \\
\boldsymbol{\epsilon} & = \text{Independent (non-spatial) random error} \\
\mathbf{A}^* & = \boldsymbol{\Sigma}^{-1/2}\mathbf{A} \text{ for a general matrix } \mathbf{A} \text{ (this is known as whitening $\mathbf{A}$)}
\end{split}
\end{equation*}\]

A hat indicates the parameters are estimated (e.g., \(\hat{\boldsymbol{\beta}}\)) or evaluated at a relevant estimated parameter vector (e.g., \(\hat{\boldsymbol{\Sigma}}\) is evaluated at \(\hat{\boldsymbol{\theta}}\)). When \(\ell(\boldsymbol{\hat{\Theta}})\) is written, it means the log-likelihood evaluated at its maximum, \(\boldsymbol{\hat{\Theta}}\). 
When the covariance matrix of \(\mathbf{A}\) is \(\boldsymbol{\Sigma}\), we say \(\mathbf{A}^*\) “whitens” \(\mathbf{A}\) because \[\begin{equation*} \text{Cov}(\mathbf{A}^*) = \text{Cov}(\boldsymbol{\Sigma}^{-1/2}\mathbf{A}) = \boldsymbol{\Sigma}^{-1/2}\text{Cov}(\mathbf{A})\boldsymbol{\Sigma}^{-1/2} = \boldsymbol{\Sigma}^{-1/2}\boldsymbol{\Sigma} \boldsymbol{\Sigma}^{-1/2} = (\boldsymbol{\Sigma}^{-1/2}\boldsymbol{\Sigma}^{1/2})(\boldsymbol{\Sigma}^{1/2}\boldsymbol{\Sigma}^{-1/2}) = \mathbf{I}. \end{equation*}\] Later we discuss how to obtain \(\boldsymbol{\Sigma}^{1/2}\).
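
As a quick numerical check of the whitening identity, the following base R sketch builds a symmetric \(\boldsymbol{\Sigma}^{-1/2}\) from an eigendecomposition. This is only one possible construction and is not necessarily the one spmodel uses; the matrix values are hypothetical.

```r
# Hypothetical 3 x 3 covariance matrix (symmetric and positive definite)
Sigma <- matrix(c(2.0, 0.8, 0.3,
                  0.8, 1.5, 0.5,
                  0.3, 0.5, 1.0), nrow = 3, byrow = TRUE)

# Symmetric inverse square root from the eigendecomposition Sigma = V D V^T
eig <- eigen(Sigma, symmetric = TRUE)
Sigma_inv_sqrt <- eig$vectors %*% diag(1 / sqrt(eig$values)) %*% t(eig$vectors)

# Whitening check: Sigma^{-1/2} Sigma Sigma^{-1/2} should equal the identity
whitened_cov <- Sigma_inv_sqrt %*% Sigma %*% Sigma_inv_sqrt
```

Up to floating point error, `whitened_cov` should match `diag(3)`.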

Additional notation is used in the predict() section:

\[\begin{equation*}

\begin{split}

\mathbf{y}_o & = \text{Observed response vector} \\

\mathbf{y}_u & = \text{Unobserved response vector} \\

\mathbf{X}_o & = \text{Design matrix of known explanatory

variables at observed response variable locations} \\

\mathbf{X}_u & = \text{Design matrix of known explanatory

variables at unobserved response variable locations} \\

\boldsymbol{\Sigma}_o & = \text{Covariance matrix of

$\mathbf{y}_o$ evaluated at } \boldsymbol{\theta} \\

\boldsymbol{\Sigma}_u & = \text{Covariance matrix of

$\mathbf{y}_u$ evaluated at } \boldsymbol{\theta} \\

\boldsymbol{\Sigma}_{uo} & = \text{A matrix of covariances

between $\mathbf{y}_u$ and $\mathbf{y}_o$ evaluated at }

\boldsymbol{\theta} \\

\mathbf{w}_o & = \text{Latent $\mathbf{w}$ for each observation

in $\mathbf{y}_o$} \\

\mathbf{w}_u & = \text{Latent $\mathbf{w}$ for each observation

in $\mathbf{y}_u$} \\

\mathbf{G}_o & = \text{Hessian for $\mathbf{w}_o$} \\

\end{split}

\end{equation*}\]

Spatial Linear Models

Statistical linear models are often parameterized as \[\begin{equation}\label{eq:lm} \mathbf{y} = \mathbf{X} \boldsymbol{\beta} + \boldsymbol{\epsilon}, \end{equation}\] where for a sample size \(n\), \(\mathbf{y}\) is an \(n \times 1\) column vector of response variables, \(\mathbf{X}\) is an \(n \times p\) design (model) matrix of explanatory variables, \(\boldsymbol{\beta}\) is a \(p \times 1\) column vector of fixed effects controlling the impact of \(\mathbf{X}\) on \(\mathbf{y}\), and \(\boldsymbol{\epsilon}\) is an \(n \times 1\) column vector of random errors. We typically assume that \(\text{E}(\boldsymbol{\epsilon}) = \mathbf{0}\) and \(\text{Cov}(\boldsymbol{\epsilon}) = \sigma^2_\epsilon \mathbf{I}\), where \(\text{E}(\cdot)\) denotes expectation, \(\text{Cov}(\cdot)\) denotes covariance, \(\sigma^2_\epsilon\) denotes a variance parameter, and \(\mathbf{I}\) denotes the identity matrix.

The model \(\mathbf{y} = \mathbf{X} \boldsymbol{\beta} + \boldsymbol{\epsilon}\) assumes the elements of \(\mathbf{y}\) are uncorrelated. Typically for spatial data, elements of \(\mathbf{y}\) are correlated, as observations close together in space tend to be more similar than observations far apart (Tobler 1970). Failing to properly accommodate the spatial dependence in \(\mathbf{y}\) can cause researchers to draw incorrect conclusions about their data. To accommodate spatial dependence in \(\mathbf{y}\), an \(n \times 1\) spatial random effect, \(\boldsymbol{\tau}\), is added to the linear model, yielding the model \[\begin{equation}\label{eq:splm} \mathbf{y} = \mathbf{X} \boldsymbol{\beta} + \boldsymbol{\tau} + \boldsymbol{\epsilon}, \end{equation}\] where \(\boldsymbol{\tau}\) is independent of \(\boldsymbol{\epsilon}\), \(\text{E}(\boldsymbol{\tau}) = \mathbf{0}\), \(\text{Cov}(\boldsymbol{\tau}) = \sigma^2_\tau \mathbf{R}\), \(\mathbf{R}\) is a matrix that determines the spatial dependence structure in \(\mathbf{y}\) and depends on a range parameter, \(\phi\). We discuss \(\mathbf{R}\) in more detail shortly. The parameter \(\sigma^2_\tau\) is called the spatially dependent random error variance or partial sill. The parameter \(\sigma^2_\epsilon\) is called the spatially independent random error variance or nugget. These two variance parameters are henceforth more intuitively written as \(\sigma^2_{de}\) and \(\sigma^2_{ie}\), respectively. The covariance of \(\mathbf{y}\) is denoted \(\boldsymbol{\Sigma}\) and given by \(\sigma^2_{de} \mathbf{R} + \sigma^2_{ie} \mathbf{I}\). The parameters that compose this covariance are contained in the vector \(\boldsymbol{\theta}\), which is called the covariance parameter vector.

The model \(\mathbf{y} = \mathbf{X}

\boldsymbol{\beta} + \boldsymbol{\tau} + \boldsymbol{\epsilon}\)

is called the spatial linear model. The spatial linear model applies to

both point-referenced and areal (i.e., lattice) data. Spatial data are

point-referenced when the elements in \(\mathbf{y}\) are observed at

point-locations indexed by x-coordinates and y-coordinates on a

spatially continuous surface with an infinite number of locations. The

splm() function is used to fit spatial linear models for

point-referenced data (these are sometimes called geostatistical

models). One spatial covariance function available in

splm() is the exponential spatial covariance function,

which has an \(\mathbf{R}\) matrix

given by \[\begin{equation*}

\mathbf{R} = \exp(-\mathbf{M} / \phi),

\end{equation*}\]

where \(\mathbf{M}\) is a matrix of

Euclidean distances among observations. Recall that \(\phi\) is the range parameter, controlling

the behavior of the covariance function as a function of distance.

Parameterizations for splm() spatial covariance types and

their \(\mathbf{R}\) matrices can be

seen by running help("splm", "spmodel") or

vignette("technical", "spmodel"). Some of these spatial

covariance types (e.g., Matérn) depend on an extra parameter beyond

\(\sigma^2_{de}\), \(\sigma^2_{ie}\), and \(\phi\).
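
To make the exponential covariance concrete, here is a minimal base R sketch that builds \(\mathbf{R}\) and \(\boldsymbol{\Sigma}\) by hand. The coordinates and parameter values are hypothetical, and the object names (`de`, `ie`, `phi`) are chosen only for illustration.

```r
# Hypothetical coordinates for four point-referenced observations
coords <- cbind(x = c(0, 1, 0, 2), y = c(0, 0, 1, 2))

# Hypothetical covariance parameter values
de <- 2.0    # partial sill (spatially dependent variance)
ie <- 0.5    # nugget (spatially independent variance)
phi <- 1.5   # range

M <- as.matrix(dist(coords))               # Euclidean distance matrix
R <- exp(-M / phi)                         # exponential R matrix
Sigma <- de * R + ie * diag(nrow(coords))  # covariance matrix of y
```

Each diagonal element of `Sigma` equals `de + ie`, and the off-diagonal elements decay toward zero as distance grows relative to `phi`.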

Spatial data are areal when the elements in \(\mathbf{y}\) are observed as part of a

finite network of polygons whose connections are indexed by a

neighborhood structure. For example, the polygons may represent counties

in a state that are neighbors if they share at least one boundary. Areal

data are often equivalently called lattice data (Cressie 1993). The spautor()

function is used to fit spatial linear models for areal data (these are

sometimes called spatial autoregressive models). One spatial

autoregressive covariance function available in spautor()

is the simultaneous autoregressive spatial covariance function, which

has an \(\mathbf{R}\) matrix given by

\[\begin{equation*}

\mathbf{R} = [(\mathbf{I} - \phi \mathbf{W})(\mathbf{I} - \phi

\mathbf{W})^\top]^{-1},

\end{equation*}\] where \(\mathbf{W}\) is a weight matrix describing

the neighborhood structure in \(\mathbf{y}\). Parameterizations for

spautor() spatial covariance types and their \(\mathbf{R}\) matrices can be seen by

running help("spautor", "spmodel") or

vignette("technical", "spmodel").

One way to define \(\mathbf{W}\) is through queen contiguity (Anselin, Syabri, and Kho 2010). Two observations are queen contiguous if they share a boundary. The \(ij\)th element of \(\mathbf{W}\) is then one if observation \(i\) and observation \(j\) are queen contiguous and zero otherwise. Observations are not considered neighbors with themselves, so each diagonal element of \(\mathbf{W}\) is zero.

Sometimes each element in the weight matrix \(\mathbf{W}\) is divided by its respective row sum. This is called row-standardization. Row-standardizing \(\mathbf{W}\) has several benefits, which are discussed in detail by Ver Hoef et al. (2018).
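
The following base R sketch illustrates a weight matrix, its row-standardized version, and the resulting simultaneous autoregressive \(\mathbf{R}\) matrix. The neighborhood structure (four polygons arranged in a row) and the value of `phi` are hypothetical.

```r
# Hypothetical neighborhood structure: four polygons in a row (1-2-3-4)
W <- matrix(0, nrow = 4, ncol = 4)
W[cbind(1:3, 2:4)] <- 1   # polygon i neighbors polygon i + 1
W <- W + t(W)             # make symmetric; the diagonal stays zero

# Row-standardization: divide each row by its row sum
W_rs <- W / rowSums(W)

# Simultaneous autoregressive R matrix for a hypothetical range parameter
phi <- 0.4
B <- diag(4) - phi * W_rs
R <- solve(B %*% t(B))
```

After row-standardization every row of `W_rs` sums to one, although `W_rs` itself is generally no longer symmetric.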

AIC() and AICc()

The AIC() and AICc() functions in

spmodel are defined for restricted maximum likelihood and

maximum likelihood estimation, which maximize a likelihood. The AIC and

AICc as defined by Hoeting et al. (2006)

are given by \[\begin{equation*}\label{eq:sp_aic}

\begin{split}

\text{AIC} & = -2\ell(\hat{\boldsymbol{\Theta}}) +

2(|\hat{\boldsymbol{\Theta}}|) \\

\text{AICc} & = -2\ell(\hat{\boldsymbol{\Theta}}) +

2n(|\hat{\boldsymbol{\Theta}}|) / (n - |\hat{\boldsymbol{\Theta}}| - 1),

\end{split}

\end{equation*}\] where \(|\hat{\boldsymbol{\Theta}}|\) is the

cardinality of \(\hat{\boldsymbol{\Theta}}\). For restricted

maximum likelihood, \(\hat{\boldsymbol{\Theta}} \equiv

\{\hat{\boldsymbol{\theta}}\}\). For maximum likelihood, \(\hat{\boldsymbol{\Theta}} \equiv

\{\hat{\boldsymbol{\theta}}, \hat{\boldsymbol{\beta}}\}\). The

discrepancy arises because restricted maximum likelihood integrates the

fixed effects out of the likelihood, and so the likelihood does not

depend on \(\boldsymbol{\beta}\).

AIC comparisons between a model fit using restricted maximum likelihood and a model fit using maximum likelihood are meaningless, as the models are fit with different likelihoods. AIC comparisons between models fit using restricted maximum likelihood are only valid when the models have the same fixed effect structure. In contrast, AIC comparisons between models fit using maximum likelihood are valid when the models have different fixed effect structures.
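
The two formulas can be sketched in base R as follows. The helper names `aic_sketch()` and `aicc_sketch()` are hypothetical (they are not spmodel functions), and the log-likelihood value is made up. The same value is reused for both estimation methods only to show how the penalty changes, not to suggest the two fits are comparable.

```r
# Hypothetical helpers: loglik is the maximized log-likelihood, k is the
# number of estimated parameters, and n is the sample size
aic_sketch <- function(loglik, k) {
  -2 * loglik + 2 * k
}
aicc_sketch <- function(loglik, k, n) {
  -2 * loglik + 2 * n * k / (n - k - 1)
}

# Hypothetical model with three covariance parameters and two fixed effects
loglik <- -150
aic_reml <- aic_sketch(loglik, k = 3)           # REML counts only theta
aic_ml <- aic_sketch(loglik, k = 3 + 2)         # ML counts theta and beta
aicc_ml <- aicc_sketch(loglik, k = 3 + 2, n = 100)
```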

anova()

Test statistics from anova() are formed using the

general linear hypothesis test. Let \(\mathbf{L}\) be an \(l \times p\) contrast matrix and \(l_0\) be an \(l

\times 1\) vector. The null hypothesis is that \(\mathbf{L} \boldsymbol{\beta} = l_0\)

and the alternative hypothesis is that \(\mathbf{L} \boldsymbol{\beta} \neq

l_0\). Usually, \(l_0\) is the

zero vector (in spmodel, this is assumed). The test

statistic is denoted \(Chi2\) and is

given by \[\begin{equation*}\label{eq:glht}

Chi2 = [(\mathbf{L} \boldsymbol{\hat{\beta}} - l_0)^\top(\mathbf{L}

(\mathbf{X}^\top \mathbf{\hat{\Sigma}}^{-1} \mathbf{X})^{-1}

\mathbf{L}^\top)^{-1}(\mathbf{L} \boldsymbol{\hat{\beta}} - l_0)] .

\end{equation*}\] By default, \(\mathbf{L}\) is chosen such that each

variable in the data used to fit the model is tested marginally (i.e.,

controlling for the other variables) against \(l_0 = \mathbf{0}\). If this default is not

desired, the Terms and L arguments can be used

to pass user-defined \(\mathbf{L}\)

matrices to anova(). They must be constructed in such a way

that \(l_0 = \mathbf{0}\).

It is notoriously difficult to determine appropriate p-values for linear mixed models based on the general linear hypothesis test. lme4, for example, does not report p-values by default. A few reasons why obtaining p-values is so challenging:

- The first (and often most important) challenge is that when estimating \(\boldsymbol{\theta}\) using a finite sample, it is usually not clear what the null distribution of \(Chi2\) is. In certain cases such as ordinary least squares regression or some experimental designs (e.g., blocked design, split plot design, etc.), \(Chi2 / rank(\mathbf{L})\) is F-distributed with known numerator and denominator degrees of freedom. But outside of these well-studied cases, no general results exist.

- The second challenge is that the standard error of \(Chi2\) does not account for the uncertainty in \(\boldsymbol{\hat{\theta}}\). For some approaches to addressing this problem, see Kackar and Harville (1984), Prasad and Rao (1990), Harville and Jeske (1992), and Kenward and Roger (1997).

- The third challenge is in determining denominator degrees of freedom. Again, in some cases, these are known – but this is not true in general. For some approaches to addressing this problem, see Satterthwaite (1946), Schluchter and Elashoff (1990), Hrong-Tai Fai and Cornelius (1996), Kenward and Roger (1997), Littell et al. (2006), Pinheiro and Bates (2006), and Kenward and Roger (2009).

For these reasons, spmodel uses an asymptotic (i.e.,

large sample) Chi-squared test when calculating p-values using

anova(). This approach addresses the three points above by

assuming that with a large enough sample size:

- \(Chi2\) is asymptotically Chi-squared (under certain conditions) with \(rank(\mathbf{L})\) degrees of freedom when the null hypothesis is true.

- The uncertainty from estimating \(\boldsymbol{\hat{\theta}}\) is small enough to be safely ignored.

Because the approximation is asymptotic, degree of freedom adjustments can be ignored (it is also worth noting that an F distribution with infinite denominator degrees of freedom is a Chi-squared distribution scaled by \(rank(\mathbf{L})\)). This asymptotic approximation implies these p-values are likely unreliable with small samples.

Note that when comparing full and reduced models, the general linear hypothesis test is analogous to an extra sum of (whitened) squares approach (Myers et al. 2012).
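
A minimal base R sketch of the asymptotic Chi-squared test is given below. It assumes simulated data, treats a known \(\boldsymbol{\Sigma}\) as if it were \(\hat{\boldsymbol{\Sigma}}\) (no covariance parameters are estimated), and uses the estimated covariance matrix of \(\hat{\boldsymbol{\beta}}\), \((\mathbf{X}^\top \hat{\boldsymbol{\Sigma}}^{-1} \mathbf{X})^{-1}\). All object names are hypothetical, and this is not the code used inside anova().

```r
set.seed(1)
n <- 50
X <- cbind(1, rnorm(n), rnorm(n))       # intercept plus two covariates
coords <- cbind(runif(n), runif(n))
Sigma <- 1.0 * exp(-as.matrix(dist(coords)) / 0.3) + 0.2 * diag(n)
y <- drop(X %*% c(1, 0.5, 0) + t(chol(Sigma)) %*% rnorm(n))

# Treat Sigma as the estimated covariance matrix
Sigma_inv <- solve(Sigma)
cov_beta <- solve(t(X) %*% Sigma_inv %*% X)
beta_hat <- cov_beta %*% t(X) %*% Sigma_inv %*% y

# Contrast matrix testing whether both slopes are zero (l_0 = 0)
L <- rbind(c(0, 1, 0),
           c(0, 0, 1))
Lb <- L %*% beta_hat
Chi2 <- drop(t(Lb) %*% solve(L %*% cov_beta %*% t(L)) %*% Lb)

# Asymptotic p-value with rank(L) degrees of freedom
df <- qr(L)$rank
p_value <- pchisq(Chi2, df = df, lower.tail = FALSE)
```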

A second approach to determining p-values is a likelihood ratio test. Let \(\ell(\boldsymbol{\hat{\Theta}})\) be the log-likelihood for some full model and \(\ell(\boldsymbol{\hat{\Theta}}_0)\) be the log-likelihood for some reduced model. For the likelihood ratio test to be valid, the reduced model must be nested in the full model, which means that \(\ell(\boldsymbol{\hat{\Theta}}_0)\) is obtained by fixing some parameters in \(\boldsymbol{\Theta}\). When the likelihood ratio test is valid, \(X^2 = 2\ell(\boldsymbol{\hat{\Theta}}) - 2\ell(\boldsymbol{\hat{\Theta}}_0)\) is asymptotically Chi-squared with degrees of freedom equal to the difference in estimated parameters between the full and reduced models.

For restricted maximum likelihood estimation, likelihood ratio tests can only be used to compare nested models with the same explanatory variables. To use likelihood ratio tests for comparing different explanatory variable structures, parameters must be estimated using maximum likelihood estimation. When using likelihood ratio tests to assess the importance of parameters on the boundary of a parameter space (e.g., a variance parameter being zero), p-values tend to be too large (Self and Liang 1987; Stram and Lee 1994; Goldman and Whelan 2000; Pinheiro and Bates 2006).
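
The likelihood ratio test arithmetic can be sketched in base R. The log-likelihood values and the difference in parameter counts are hypothetical and stand in for two nested models fit with maximum likelihood.

```r
# Hypothetical maximized log-likelihoods for nested models; the full model
# has two more estimated parameters than the reduced model
loglik_full <- -120.4
loglik_reduced <- -124.9
df <- 2

X2 <- 2 * loglik_full - 2 * loglik_reduced
p_value <- pchisq(X2, df = df, lower.tail = FALSE)
```

If the reduced model fixes a variance parameter at zero (a boundary value), this p-value is expected to be too large, as noted above.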

BIC()

The BIC() function in spmodel is defined

for restricted maximum likelihood and maximum likelihood estimation,

which maximize a likelihood. The BIC as defined by Schwarz (1978) is given by \[\begin{equation*}\label{eq:sp_bic}

\text{BIC} = -2\ell(\hat{\boldsymbol{\Theta}}) +

\ln(n)(|\hat{\boldsymbol{\Theta}}|),

\end{equation*}\] where \(n\) is

the sample size and \(|\hat{\boldsymbol{\Theta}}|\) is the

cardinality of \(\hat{\boldsymbol{\Theta}}\). For restricted

maximum likelihood, \(\hat{\boldsymbol{\Theta}} \equiv

\{\hat{\boldsymbol{\theta}}\}\). For maximum likelihood, \(\hat{\boldsymbol{\Theta}} \equiv

\{\hat{\boldsymbol{\theta}}, \hat{\boldsymbol{\beta}}\}\). The

discrepancy arises because restricted maximum likelihood integrates the

fixed effects out of the likelihood, and so the likelihood does not

depend on \(\boldsymbol{\beta}\).

BIC comparisons between a model fit using restricted maximum likelihood and a model fit using maximum likelihood are meaningless, as the models are fit with different likelihoods. BIC comparisons between models fit using restricted maximum likelihood are only valid when the models have the same fixed effect structure. In contrast, BIC comparisons between models fit using maximum likelihood are valid when the models have different fixed effect structures. While BIC was derived by Schwarz (1978) for independent data, Zimmerman and Ver Hoef (2024) show it can be useful for spatially-dependent data as well.

coef()

coef() returns relevant coefficients based on the

type argument. When type = "fixed" (the

default), coef() returns \[\begin{equation*}

\hat{\boldsymbol{\beta}} = (\mathbf{X}^\top

\hat{\boldsymbol{\Sigma}}^{-1} \mathbf{X})^{-1}\mathbf{X}^\top

\hat{\boldsymbol{\Sigma}}^{-1} \mathbf{y} .

\end{equation*}\] If the estimation method is restricted maximum

likelihood or maximum likelihood, \(\hat{\boldsymbol{\beta}}\) is known as the

restricted maximum likelihood or maximum likelihood estimator of \(\boldsymbol{\beta}\). If the estimation

method is semivariogram weighted least squares or semivariogram

composite likelihood, \(\hat{\boldsymbol{\beta}}\) is known as the

empirical generalized least squares estimator of \(\boldsymbol{\beta}\). When

type = "spcov", the estimated spatial covariance parameters

are returned (available for all estimation methods). When

type = "randcov", the estimated random effect variance

parameters are returned (available for restricted maximum likelihood and

maximum likelihood estimation).
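
The fixed effect estimator above can be computed directly or, equivalently, by ordinary least squares on whitened data. The base R sketch below illustrates both routes with simulated data and a known \(\boldsymbol{\Sigma}\) standing in for \(\hat{\boldsymbol{\Sigma}}\). It whitens with a Cholesky factor rather than the symmetric \(\boldsymbol{\Sigma}^{-1/2}\), which is an assumption made for convenience and yields the same estimate.

```r
set.seed(2)
n <- 30
X <- cbind(1, rnorm(n))
coords <- cbind(runif(n), runif(n))
Sigma <- 1.5 * exp(-as.matrix(dist(coords)) / 0.4) + 0.3 * diag(n)
y <- drop(X %*% c(2, -1) + t(chol(Sigma)) %*% rnorm(n))

# Direct form: (X^T Sigma^{-1} X)^{-1} X^T Sigma^{-1} y
Sigma_inv <- solve(Sigma)
beta_direct <- solve(t(X) %*% Sigma_inv %*% X, t(X) %*% Sigma_inv %*% y)

# Equivalent form: ordinary least squares on whitened data
L_chol <- t(chol(Sigma))             # lower triangular, Sigma = L L^T
X_star <- forwardsolve(L_chol, X)    # L^{-1} X
y_star <- forwardsolve(L_chol, y)    # L^{-1} y
beta_whitened <- qr.solve(X_star, y_star)
```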

confint()

confint() returns confidence intervals for estimated

parameters. Currently, confint() only returns confidence

intervals for \(\boldsymbol{\beta}\).

The \(100(1 - \alpha)\)% confidence

interval for \(\beta_i\) is \[\begin{equation*}

\hat{\beta}_i \pm z^* \sqrt{(\mathbf{X}^\top

\hat{\boldsymbol{\Sigma}}^{-1} \mathbf{X})^{-1}_{i, i}},

\end{equation*}\] where \((\mathbf{X}^\top \hat{\boldsymbol{\Sigma}}^{-1}

\mathbf{X})^{-1}_{i, i}\) is the \(i\)th diagonal element in \((\mathbf{X}^\top \hat{\boldsymbol{\Sigma}}^{-1}

\mathbf{X})^{-1}\), \(\Phi(z^*) = 1 -

\alpha / 2\), \(\Phi(\cdot)\) is

the standard normal (Gaussian) cumulative distribution function, and

\(\alpha = 1 -\) level,

where level is an argument to confint(). The

default for level is 0.95, which corresponds to a \(z^*\) of approximately 1.96.
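
The interval arithmetic can be sketched in base R. The estimates and the matrix `cov_beta`, which stands in for \((\mathbf{X}^\top \hat{\boldsymbol{\Sigma}}^{-1} \mathbf{X})^{-1}\), are hypothetical values chosen for illustration.

```r
# Hypothetical fixed effect estimates and their estimated covariance matrix
beta_hat <- c(2.1, -0.8)
cov_beta <- matrix(c(0.25, 0.02,
                     0.02, 0.04), nrow = 2)

level <- 0.95
alpha <- 1 - level
z_star <- qnorm(1 - alpha / 2)   # approximately 1.96

se <- sqrt(diag(cov_beta))       # standard errors of each estimate
ci <- cbind(lower = beta_hat - z_star * se,
            upper = beta_hat + z_star * se)
```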

cooks.distance()

Cook’s distance measures the influence of an observation (Cook 1979; Cook and Weisberg 1982). An influential observation has a large impact on the model fit. The vector of Cook’s distances for the spatial linear model is given by \[\begin{equation} \label{eq:cooksd} \frac{\mathbf{e}_p^2}{p} \odot diag(\mathbf{H}_s) \odot \frac{1}{1 - diag(\mathbf{H}_s)}, \end{equation}\] where \(\mathbf{e}_p\) are the Pearson residuals, \(diag(\mathbf{H}_s)\) is the diagonal of the spatial hat matrix, \(\mathbf{H}_s \equiv \mathbf{X}^* (\mathbf{X}^{* \top} \mathbf{X}^*)^{-1} \mathbf{X}^{* \top}\) (Montgomery, Peck, and Vining 2021), and \(\odot\) denotes the Hadamard (element-wise) product. The larger the Cook’s distance, the larger the influence.

To better understand the previous form, recall that the non-spatial linear model \(\mathbf{y} = \mathbf{X} \boldsymbol{\beta} + \boldsymbol{\epsilon}\) assumes elements of \(\boldsymbol{\epsilon}\) are independent and identically distributed (iid) with constant variance. In this context the vector of non-spatial Cook’s distances is given by \[\begin{equation*} \frac{\mathbf{e}_p^2}{p} \odot diag(\mathbf{H}) \odot \frac{1}{1 - diag(\mathbf{H})}, \end{equation*}\] where \(diag(\mathbf{H})\) is the diagonal of the non-spatial hat matrix, \(\mathbf{H} \equiv \mathbf{X} (\mathbf{X}^{\top} \mathbf{X})^{-1} \mathbf{X}^{\top}\). When the elements of \(\boldsymbol{\epsilon}\) are not iid or do not have constant variance or both, the spatial Cook’s distance cannot be calculated using \(\mathbf{H}\). First the linear model must be whitened according to \(\mathbf{y}^* = \mathbf{X}^* \boldsymbol{\beta} + \boldsymbol{\epsilon}^*\), where \(\boldsymbol{\epsilon}^*\) is the whitened version of the sum of all random errors in the model. Then the spatial Cook’s distance follows using \(\mathbf{X}^*\), the whitened version of \(\mathbf{X}\).

deviance()

The deviance of a fitted model is \[\begin{equation*} \mathcal{D}_{\boldsymbol{\Theta}} = 2\ell(\boldsymbol{\Theta}_s) - 2\ell(\boldsymbol{\hat{\Theta}}), \end{equation*}\] where \(\ell(\boldsymbol{\Theta}_s)\) is the log-likelihood of a “saturated” model that fits every observation perfectly. For normal (Gaussian) random errors, \[\begin{equation*} \mathcal{D}_{\boldsymbol{\Theta}} = (\mathbf{y} - \mathbf{X} \hat{\boldsymbol{\beta}})^\top \hat{\boldsymbol{\Sigma}}^{-1} (\mathbf{y} - \mathbf{X} \hat{\boldsymbol{\beta}}) \end{equation*}\]

fitted()

Fitted values can be obtained for the response, spatial random

errors, and random effects. The fitted values for the response

(type = "response"), denoted \(\mathbf{\hat{y}}\), are given by \[\begin{equation*}\label{eq:fit_resp}

\mathbf{\hat{y}} = \mathbf{X} \boldsymbol{\hat{\beta}} .

\end{equation*}\] They are the estimated mean response given the

set of explanatory variables for each observation.

Fitted values for spatial random errors (type = "spcov")

and random effects (type = "randcov") are linked to best

linear unbiased predictors from linear mixed model theory. Consider the

standard random effects parameterization \[\begin{equation*}

\mathbf{y} = \mathbf{X} \boldsymbol{\beta} + \mathbf{Z} \mathbf{u} +

\boldsymbol{\epsilon},

\end{equation*}\] where \(\mathbf{Z}\) denotes the random effects

design matrix, \(\mathbf{u}\) denotes

the random effects, and \(\boldsymbol{\epsilon}\) denotes independent

random error. Henderson (1975) states that

the best linear unbiased predictor (BLUP) of a single random effect

vector \(\mathbf{u}\), denoted \(\mathbf{\hat{u}}\), is given by \[\begin{equation}\label{eq:blup_mm}

\mathbf{\hat{u}} = \sigma^2_u \mathbf{Z}^\top

\mathbf{\Sigma}^{-1}(\mathbf{y} - \mathbf{X} \boldsymbol{\hat{\beta}}),

\end{equation}\] where \(\sigma^2_u\) is the variance of \(\mathbf{u}\).

Searle, Casella, and McCulloch (2009) generalize this idea by showing that for a random vector \(\boldsymbol{\alpha}\) in a linear model, the best linear unbiased predictor (based on the response, \(\mathbf{y}\)) of \(\boldsymbol{\alpha}\), denoted \(\boldsymbol{\hat{\alpha}}\), is given by \[\begin{equation}\label{eq:blup_gen} \boldsymbol{\hat{\alpha}} = \text{E}(\boldsymbol{\alpha}) + \boldsymbol{\Sigma}_\alpha \boldsymbol{\Sigma}^{-1}(\mathbf{y} - \mathbf{X} \boldsymbol{\hat{\beta}}), \end{equation}\] where \(\boldsymbol{\Sigma}_\alpha = \text{Cov}(\boldsymbol{\alpha}, \mathbf{y})\). Evaluating this equation at the plug-in (empirical) estimates of the covariance parameters yields the empirical best linear unbiased predictor (EBLUP) of \(\boldsymbol{\alpha}\).

Recall that the spatial linear model with random effects is \[\begin{equation*} \mathbf{y} = \mathbf{X} \boldsymbol{\beta} + \mathbf{Z} \mathbf{u} + \boldsymbol{\tau} + \boldsymbol{\epsilon}, \end{equation*}\] Building from previous results, we can find BLUPs for each random term in the spatial linear model (\(\mathbf{u}\), \(\boldsymbol{\tau}\), and \(\boldsymbol{\epsilon}\)). For example, the BLUP of \(\mathbf{u}\) is found by noting that \(\text{E}(\mathbf{u}) = \mathbf{0}\) and \[\begin{equation*} \mathbf{\Sigma}_u = \text{Cov}(\mathbf{u}, \mathbf{y}) = \text{Cov}(\mathbf{u}, \mathbf{X} \boldsymbol{\beta} + \mathbf{Z} \mathbf{u} + \boldsymbol{\tau} + \boldsymbol{\epsilon}) = \text{Cov}(\mathbf{u}, \mathbf{Z}\mathbf{u}) = \text{Cov}(\mathbf{u}, \mathbf{u})\mathbf{Z}^\top = \sigma^2_u \mathbf{Z}^\top, \end{equation*}\] where the result follows because the random terms in \(\mathbf{y}\) are independent and \(\text{Cov}(\mathbf{u}, \mathbf{u}) = \sigma^2_u \mathbf{I}\). Then it follows that \[\begin{equation*} \hat{\mathbf{u}} = \text{E}(\mathbf{u}) + \boldsymbol{\Sigma}_u \boldsymbol{\Sigma}^{-1}(\mathbf{y} - \mathbf{X} \boldsymbol{\hat{\beta}}) = \sigma^2_u \mathbf{Z}^\top \boldsymbol{\Sigma}^{-1}(\mathbf{y} - \mathbf{X} \boldsymbol{\hat{\beta}}), \end{equation*}\] which matches the previous form of the BLUP. Similarly, the BLUP of \(\boldsymbol{\tau}\) is found by noting that \(\text{E}(\boldsymbol{\tau}) = \mathbf{0}\) and \[\begin{equation*} \mathbf{\Sigma}_{de} = \text{Cov}(\boldsymbol{\tau}, \mathbf{y}) = \text{Cov}(\boldsymbol{\tau}, \mathbf{X} \boldsymbol{\beta} + \mathbf{Z} \mathbf{u} + \boldsymbol{\tau} + \boldsymbol{\epsilon}) = \text{Cov}(\boldsymbol{\tau}, \boldsymbol{\tau}) = \sigma^2_{de} \mathbf{R}, \end{equation*}\] where the result follows because the random terms in \(\mathbf{y}\) are independent and \(\text{Cov}(\boldsymbol{\tau}, \boldsymbol{\tau}) = \sigma^2_{de} \mathbf{R}\), and \(\sigma^2_{de}\) is the variance of \(\boldsymbol{\tau}\). 
Then it follows that \[\begin{equation}\label{eq:blup_sp} \hat{\boldsymbol{\tau}} = \text{E}(\boldsymbol{\tau}) + \boldsymbol{\Sigma}_{de} \boldsymbol{\Sigma}^{-1}(\mathbf{y} - \mathbf{X} \boldsymbol{\hat{\beta}}) = \sigma^2_{de} \mathbf{R} \boldsymbol{\Sigma}^{-1}(\mathbf{y} - \mathbf{X} \boldsymbol{\hat{\beta}}). \end{equation}\] Fitted values for \(\boldsymbol{\epsilon}\) are obtained using similar arguments. Evaluating these equations at the plug-in (empirical) estimates of the covariance parameters yields EBLUPs.
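
The BLUP formulas for \(\boldsymbol{\tau}\) and \(\boldsymbol{\epsilon}\) can be sketched in base R. This example assumes a model without \(\mathbf{Z}\mathbf{u}\), so that \(\boldsymbol{\Sigma} = \sigma^2_{de} \mathbf{R} + \sigma^2_{ie} \mathbf{I}\), and it uses simulated data with hypothetical parameter values treated as known.

```r
set.seed(4)
n <- 20
X <- cbind(1, rnorm(n))
coords <- cbind(runif(n), runif(n))

# Hypothetical covariance parameter values
de <- 1.2
ie <- 0.4
phi <- 0.5
R <- exp(-as.matrix(dist(coords)) / phi)
Sigma <- de * R + ie * diag(n)
y <- drop(X %*% c(1, 2) + t(chol(Sigma)) %*% rnorm(n))

# Generalized least squares estimate and residuals y - X beta_hat
Sigma_inv <- solve(Sigma)
beta_hat <- solve(t(X) %*% Sigma_inv %*% X, t(X) %*% Sigma_inv %*% y)
resid <- y - drop(X %*% beta_hat)

# BLUPs of the spatial and independent random errors
tau_hat <- drop(de * R %*% Sigma_inv %*% resid)
eps_hat <- drop(ie * Sigma_inv %*% resid)
```

Under this assumption the two BLUPs add up to the residual vector, because \((\sigma^2_{de} \mathbf{R} + \sigma^2_{ie} \mathbf{I}) \boldsymbol{\Sigma}^{-1} = \mathbf{I}\).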

When partition factors are used, the covariance matrix of all random effects (spatial and non-spatial) can be viewed as the interaction between the non-partitioned covariance matrix and the partition matrix, \(\mathbf{P}\). The \(ij\)th entry in \(\mathbf{P}\) equals one if observation \(i\) and observation \(j\) share the same level of the partition factor and zero otherwise. For spatial random effects, an adjustment is straightforward, as each column in \(\boldsymbol{\Sigma}_{de}\) corresponds to a distinct spatial random effect. Thus with partition factors, \(\boldsymbol{\Sigma}_{de}^* = \boldsymbol{\Sigma}_{de} \odot \mathbf{P} = \sigma^2_{de} \mathbf{R} \odot \mathbf{P}\), where \(\odot\) denotes the Hadamard (element-wise) product, is used instead of \(\boldsymbol{\Sigma}_{de}\). Note that \(\boldsymbol{\Sigma}_{ie}\) is unchanged as it is proportional to the identity matrix. For non-spatial random effects, however, the situation is more complicated. Applying the BLUP formula directly yields BLUPs of random effects corresponding to the interaction between random effect levels and partition levels. Thus a logical approach is to average the non-zero BLUPs for each random effect level across partition levels, yielding a prediction for the random effect level. This does not imply, however, that these estimates are BLUPs of the random effect.
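
As a small illustration of the spatial adjustment, the base R sketch below builds a partition matrix and applies it element-wise. The partition levels, coordinates, and parameter values are hypothetical.

```r
# Hypothetical partition factor levels for five observations
part <- c("a", "a", "b", "b", "a")

# P[i, j] is 1 when observations i and j share a level and 0 otherwise
P <- outer(part, part, "==") * 1

# Hypothetical spatially dependent covariance (exponential, sigma^2_de = 2)
coords <- cbind(c(0, 1, 2, 3, 4), c(0, 0, 0, 0, 0))
Sigma_de <- 2 * exp(-as.matrix(dist(coords)) / 1.5)

# The element-wise product zeroes covariances across partition levels
Sigma_de_part <- Sigma_de * P
```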

For big data without partition factors, the local indexes act as partition factors. That is, the BLUPs correspond to random effects interacted with each local index. For big data with partition factors, an adjusted partition factor is created as the interaction between each local index and the partition factor. Then this adjusted partition factor is applied to yield \(\hat{\boldsymbol{\alpha}}\).

hatvalues()

Hat values measure the leverage of an observation. An observation has high leverage if its combination of explanatory variables is atypical (far from the mean explanatory vector). The spatial leverage (hat) matrix is given by \[\begin{equation} \label{eq:leverage} \mathbf{H}_s = \mathbf{X}^* (\mathbf{X}^{* \top} \mathbf{X}^*)^{-1} \mathbf{X}^{* \top}. \end{equation}\] The diagonal of this matrix yields the leverage (hat) values for each observation (Montgomery, Peck, and Vining 2021). The larger the hat value, the larger the leverage.

To better understand the previous form, recall that the non-spatial linear model \(\mathbf{y} = \mathbf{X} \boldsymbol{\beta} + \boldsymbol{\epsilon}\) assumes elements of \(\boldsymbol{\epsilon}\) are independent and identically distributed (iid) with constant variance. In this context, the leverage (hat) matrix is given by \[\begin{equation*} \mathbf{H} \equiv \mathbf{X} (\mathbf{X}^{\top} \mathbf{X})^{-1} \mathbf{X}^{\top}. \end{equation*}\] When the elements of \(\boldsymbol{\epsilon}\) are not iid or do not have constant variance or both, the spatial leverage (hat) matrix is not \(\mathbf{H}\). First the linear model must be whitened according to \(\mathbf{y}^* = \mathbf{X}^* \boldsymbol{\beta} + \boldsymbol{\epsilon}^*\), where \(\boldsymbol{\epsilon}^*\) is the whitened version of the sum of all random errors in the model. Then the spatial leverage (hat) matrix follows using \(\mathbf{X}^*\), the whitened version of \(\mathbf{X}\).
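
The spatial hat values can be sketched in base R by whitening \(\mathbf{X}\) and forming the projection matrix. The data are simulated, \(\boldsymbol{\Sigma}\) is treated as known, and the eigendecomposition used to build \(\boldsymbol{\Sigma}^{-1/2}\) is an assumption made for illustration rather than a description of spmodel's internals.

```r
set.seed(3)
n <- 25
X <- cbind(1, rnorm(n), rnorm(n))
coords <- cbind(runif(n), runif(n))
Sigma <- exp(-as.matrix(dist(coords)) / 0.5) + 0.1 * diag(n)

# Whiten X with a symmetric inverse square root of Sigma
eig <- eigen(Sigma, symmetric = TRUE)
Sigma_inv_sqrt <- eig$vectors %*% diag(1 / sqrt(eig$values)) %*% t(eig$vectors)
X_star <- Sigma_inv_sqrt %*% X

# Spatial hat matrix and its diagonal (the leverage values)
H_s <- X_star %*% solve(t(X_star) %*% X_star) %*% t(X_star)
hat_values <- diag(H_s)
```

Because `H_s` is a projection matrix, its diagonal elements lie between zero and one and sum to the number of columns in `X`.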

loocv()

\(k\)-fold cross validation is a

useful tool for evaluating model fits using “hold-out” data. The data

are split into \(k\) sets. One-by-one,

one of the \(k\) sets is held out, the

model is fit to the remaining \(k - 1\)

sets, and predictions at each observation in the hold-out set are

compared to their true values. The closer the predictions are to the

true observations, the better the model fit. A special case where \(k = n\) is known as leave-one-out cross

validation (loocv), as each observation is left out one-by-one.

Computationally efficient solutions exist for leave-one-out cross

validation in the non-spatial linear model (with iid, constant variance

errors). Outside of this case, however, fitting \(n\) separate models can be computationally

infeasible. loocv() makes a compromise that balances an

approximation to the true solution with computational feasibility. First

\(\boldsymbol{\theta}\) is estimated

using all of the data. Then for each of the \(n\) model fits, loocv() does

not re-estimate \(\boldsymbol{\theta}\)

but does re-estimate \(\boldsymbol{\beta}\). This approach relies

on the assumption that the covariance parameter estimates obtained using

\(n - 1\) observations are

approximately the same as the covariance parameter estimates obtained

using all \(n\) observations. For a

large enough sample size, this is a reasonable assumption.

First define \(\boldsymbol{\Sigma}_{-i, -i}\) as \(\boldsymbol{\Sigma}\) with the \(i\)th row and column deleted, \(\boldsymbol{\Sigma}_{i, -i}\) as the \(i\)th row of \(\boldsymbol{\Sigma}\) with the \(i\)th column deleted, \(\boldsymbol{\Sigma}_{i, i}\) as the \(i\)th diagonal element of \(\boldsymbol{\Sigma}\), \(\mathbf{X}_{-i}\) as \(\mathbf{X}\) with the \(i\)th row deleted, \(\mathbf{X}_{i}\) as the \(i\)th row of \(\mathbf{X}\), \(\mathbf{y}_{-i}\) as \(\mathbf{y}\) with the \(i\)th element deleted, and \(y_i\) as the \(i\)th element of \(\mathbf{y}\). Wolf (1978) shows that given \(\boldsymbol{\Sigma}^{-1}\), a computationally efficient form for \(\boldsymbol{\Sigma}^{-1}_{-i, -i}\) exists. First observe that \(\boldsymbol{\Sigma}^{-1}\) can be represented blockwise as \[\begin{equation*} \boldsymbol{\Sigma}^{-1} = \begin{bmatrix} \tilde{\boldsymbol{\Sigma}}_{-i, -i} & \tilde{\boldsymbol{\Sigma}}_{i,-i}^\top \\ \tilde{\boldsymbol{\Sigma}}_{i,-i} & \tilde{\boldsymbol{\Sigma}}_{i, i} \end{bmatrix}, \end{equation*}\] where the dimensions of each \(\tilde{\boldsymbol{\Sigma}}\) match the respective dimensions of relevant blocks in \(\boldsymbol{\Sigma}\). Then it follows that \[\begin{equation*} \boldsymbol{\Sigma}^{-1}_{-i, -i} = \tilde{\boldsymbol{\Sigma}}_{-i, -i} - \tilde{\boldsymbol{\Sigma}}_{i,-i}^\top \tilde{\boldsymbol{\Sigma}}_{i, i}^{-1}\tilde{\boldsymbol{\Sigma}}_{i,-i} \end{equation*}\] and \[\begin{equation*} \hat{\boldsymbol{\beta}}_{-i} = (\mathbf{X}^\top_{-i} \boldsymbol{\Sigma}^{-1}_{-i, -i} \mathbf{X}_{-i})^{-1} \mathbf{X}^\top_{-i} \boldsymbol{\Sigma}^{-1}_{-i, -i} \mathbf{y}_{-i}, \end{equation*}\] where \(\hat{\boldsymbol{\beta}}_{-i}\) is the estimate of \(\boldsymbol{\beta}\) constructed without the \(i\)th observation.

The loocv prediction of \(y_i\) is then given by \[\begin{equation*} \hat{y}_i = \mathbf{X}_i \hat{\boldsymbol{\beta}}_{-i} + \hat{\boldsymbol{\Sigma}}_{i, -i}\hat{\boldsymbol{\Sigma}}^{-1}_{-i, -i}(\mathbf{y}_{-i} - \mathbf{X}_{-i} \hat{\boldsymbol{\beta}}_{-i}) \end{equation*}\] and the prediction variance of the loocv prediction of \(y_i\) is given by \[\begin{equation*} \dot{\sigma}^2_i = \hat{\boldsymbol{\Sigma}}_{i, i} - \hat{\boldsymbol{\Sigma}}_{i, - i} \hat{\boldsymbol{\Sigma}}^{-1}_{-i, -i} \hat{\boldsymbol{\Sigma}}_{i, - i}^\top + \mathbf{Q}_i(\mathbf{X}_{-i}^\top \hat{\boldsymbol{\Sigma}}_{-i, -i}^{-1} \mathbf{X}_{-i})^{-1}\mathbf{Q}_i^\top , \end{equation*}\] where \(\mathbf{Q}_i = \mathbf{X}_i - \hat{\boldsymbol{\Sigma}}_{i, -i} \hat{\boldsymbol{\Sigma}}^{-1}_{-i, -i} \mathbf{X}_{-i}\). These formulas are analogous to the formulas used to obtain linear unbiased predictions of unobserved data and prediction variances. Model fits are evaluated using several statistics: bias, mean-squared-prediction error (MSPE), root-mean-squared-prediction error (RMSPE), and the squared correlation (cor2) between the observed data and leave-one-out predictions (regarded as a prediction version of r-squared appropriate for comparing across spatial and nonspatial models).

Bias is formally defined as \[\begin{equation*} bias = \frac{1}{n}\sum_{i = 1}^n(y_i - \hat{y}_i). \end{equation*}\]

MSPE is formally defined as \[\begin{equation*} MSPE = \frac{1}{n}\sum_{i = 1}^n(y_i - \hat{y}_i)^2. \end{equation*}\]

RMSPE is formally defined as \[\begin{equation*} RMSPE = \sqrt{\frac{1}{n}\sum_{i = 1}^n(y_i - \hat{y}_i)^2}. \end{equation*}\]

cor2 is formally defined as \[\begin{equation*} cor2 = \text{Cor}(\mathbf{y}, \hat{\mathbf{y}})^2, \end{equation*}\] where Cor\((\cdot)\) is the correlation function. cor2 is only returned for spatial linear models, as it is not applicable for spatial generalized linear models (we are predicting a latent mean parameter, which is unknown and not on the same scale as the original data).

Generally, bias should be near zero for well-fitting models. The lower the MSPE and RMSPE, the better the model fit. The higher the cor2, the better the model fit.
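As a small worked illustration of the four statistics, the base R sketch below evaluates them on made-up numbers. The vectors `y` and `y_hat` are hypothetical observed values and leave-one-out predictions, not output from any fitted model.

```r
# Hypothetical observed values and leave-one-out predictions
y     <- c(2.1, 3.4, 1.9, 4.2, 3.0)
y_hat <- c(2.4, 3.1, 2.2, 3.9, 3.3)

err   <- y - y_hat
bias  <- mean(err)          # average prediction error
MSPE  <- mean(err^2)        # mean-squared-prediction error
RMSPE <- sqrt(MSPE)         # root-mean-squared-prediction error
cor2  <- cor(y, y_hat)^2    # squared correlation
```

Here every error has magnitude 0.3, so MSPE is 0.09 and RMSPE is 0.3, while the bias of -0.06 reflects that three of the five predictions overshoot.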

Big Data

Options for big data leave-one-out cross validation rely on the local argument, which is passed to predict(). The local list for predict() is explained in detail in the predict() section, but we provide a short summary of how local interacts with loocv() here.

For splm() and spautor() objects, the local method can be "all". When the local method is "all", all of the data are used for leave-one-out cross validation (i.e., it is implemented exactly as previously described). Parallelization is implemented when setting parallel = TRUE in local, and the number of cores to use for parallelization is specified via ncores.

For splm() objects, additional options for the local method are "covariance" and "distance". When the local method is "covariance", then a number of observations (specified via the size argument) having the highest covariance with the held-out observation are used in the local neighborhood prediction approach. When the local method is "distance", then a number of observations (specified via the size argument) closest to the held-out observation are used in the local neighborhood prediction approach. When no random effects are used, no partition factor is used, and the spatial covariance function is monotone decreasing, "covariance" and "distance" are equivalent. The local neighborhood approach only uses the observations in the local neighborhood of the held-out observation to perform prediction, and is thus an approximation to the true solution. Its computational efficiency derives from using \(\boldsymbol{\Sigma}_{l, l}\) (the covariance matrix of the observations in the local neighborhood) instead of \(\boldsymbol{\Sigma}\) (the covariance matrix of all the observations). Parallelization is implemented when setting parallel = TRUE in local, and the number of cores to use for parallelization is specified via ncores.

predict()

interval = "none"

The empirical best linear unbiased predictions (i.e., empirical Kriging predictor) of \(\mathbf{y}_u\) are given by \[\begin{equation}\label{eq:blup} \mathbf{\dot{y}}_u = \mathbf{X}_u \hat{\boldsymbol{\beta}} + \hat{\boldsymbol{\Sigma}}_{uo} \hat{\boldsymbol{\Sigma}}^{-1}_{o} (\mathbf{y}_o - \mathbf{X}_o \hat{\boldsymbol{\beta}}) . \end{equation}\]

This equation is sometimes called an empirical universal Kriging predictor, a Kriging with external drift predictor, or a regression Kriging predictor.

The covariance matrix of \(\mathbf{\dot{y}}_u\) is \[\begin{equation}\label{eq:blup_cov} \dot{\boldsymbol{\Sigma}}_u = \hat{\boldsymbol{\Sigma}}_u - \hat{\boldsymbol{\Sigma}}_{uo} \hat{\boldsymbol{\Sigma}}^{-1}_o \hat{\boldsymbol{\Sigma}}^\top_{uo} + \mathbf{Q}(\mathbf{X}_o^\top \hat{\boldsymbol{\Sigma}}_o^{-1} \mathbf{X}_o)^{-1}\mathbf{Q}^\top , \end{equation}\] where \(\mathbf{Q} = \mathbf{X}_u - \hat{\boldsymbol{\Sigma}}_{uo} \hat{\boldsymbol{\Sigma}}^{-1}_o \mathbf{X}_o\).

When se.fit = TRUE, standard errors are returned by

taking the square root of the diagonal of \(\dot{\boldsymbol{\Sigma}}_u\).
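The prediction and covariance equations translate almost line for line into matrix code. The sketch below uses base R on simulated data and treats the covariance parameters as known. The exponential covariance, its range of 0.5, the nugget of 0.1, and all object names are assumptions made for illustration, so this is not how predict() is implemented internally.

```r
set.seed(2)
n_o <- 8; n_u <- 2
coords <- matrix(runif(2 * (n_o + n_u)), ncol = 2)
# Assumed joint covariance of observed and unobserved data
Sigma <- exp(-as.matrix(dist(coords)) / 0.5) + diag(0.1, n_o + n_u)
o <- 1:n_o
u <- n_o + 1:n_u
X <- cbind(1, coords[, 1])
X_o <- X[o, ]
X_u <- X[u, , drop = FALSE]
# Simulate observed data from the assumed model
y_o <- as.numeric(X_o %*% c(1, 2) + t(chol(Sigma[o, o])) %*% rnorm(n_o))

Sig_o_inv <- solve(Sigma[o, o])
Sig_uo <- Sigma[u, o, drop = FALSE]
cov_beta <- solve(t(X_o) %*% Sig_o_inv %*% X_o)
beta_hat <- cov_beta %*% t(X_o) %*% Sig_o_inv %*% y_o

# Predictions: fixed-effect part plus Kriged residuals
y_dot <- X_u %*% beta_hat + Sig_uo %*% Sig_o_inv %*% (y_o - X_o %*% beta_hat)

# Prediction covariance, including the cost of estimating beta
Q <- X_u - Sig_uo %*% Sig_o_inv %*% X_o
Sig_dot <- Sigma[u, u] - Sig_uo %*% Sig_o_inv %*% t(Sig_uo) +
  Q %*% cov_beta %*% t(Q)
se_fit <- sqrt(diag(Sig_dot))
```

The last term in `Sig_dot` is what distinguishes universal Kriging from simple Kriging: it adds the uncertainty that comes from estimating \(\boldsymbol{\beta}\).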

interval = "prediction"

The empirical best linear unbiased predictions are returned as \(\mathbf{\dot{y}}_u\). The (100 \(\times\) level)% prediction interval for \((y_u)_i\) is \((\dot{y}_u)_i \pm z^* \sqrt{(\dot{\boldsymbol{\Sigma}}_u)_{i, i}}\), where \(\sqrt{(\dot{\boldsymbol{\Sigma}}_u)_{i, i}}\) is the standard error of \((\dot{y}_u)_i\) obtained from se.fit = TRUE, \(\Phi(z^*) = 1 - \alpha / 2\), \(\Phi(\cdot)\) is the standard normal (Gaussian) cumulative distribution function, \(\alpha = 1 -\) level, and level is an argument to predict(). The default for level is 0.95, which corresponds to a \(z^*\) of approximately 1.96.
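The critical value \(z^*\) comes directly from the standard normal quantile function. In this short base R sketch, the prediction `y_dot` and standard error `se` are hypothetical placeholders.

```r
level <- 0.95
alpha <- 1 - level
z_star <- qnorm(1 - alpha / 2)   # solves Phi(z_star) = 1 - alpha / 2

y_dot <- 10   # hypothetical prediction
se <- 2       # hypothetical standard error
lower <- y_dot - z_star * se
upper <- y_dot + z_star * se
```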

interval = "confidence"

The best linear unbiased estimates of \(\text{E}[(y_u)_i]\) (\(\text{E}(\cdot)\) denotes expectation) are returned by evaluating \((\mathbf{X}_u)_i \hat{\boldsymbol{\beta}}\), where \((\mathbf{X}_u)_i\) is the \(i\)th row of \(\mathbf{X}_u\) (i.e., fitted values corresponding to \((\mathbf{X}_u)_i\) are returned). The (100 \(\times\) level)% confidence interval for \(\text{E}[(y_u)_i]\) is \((\mathbf{X}_u)_i \hat{\boldsymbol{\beta}} \pm z^* \sqrt{(\mathbf{X}_u)_i (\mathbf{X}^\top_o \hat{\boldsymbol{\Sigma}}_o^{-1} \mathbf{X}_o)^{-1} (\mathbf{X}_u)_i^\top}\), where \(\sqrt{(\mathbf{X}_u)_i (\mathbf{X}^\top_o \hat{\boldsymbol{\Sigma}}_o^{-1} \mathbf{X}_o)^{-1} (\mathbf{X}_u)_i^\top}\) is the standard error of \((\mathbf{X}_u)_i \hat{\boldsymbol{\beta}}\) obtained from se.fit = TRUE, \(\Phi(z^*) = 1 - \alpha / 2\), \(\Phi(\cdot)\) is the standard normal (Gaussian) cumulative distribution function, \(\alpha = 1 -\) level, and level is an argument to predict(). The default for level is 0.95, which corresponds to a \(z^*\) of approximately 1.96.

spautor() extra steps

For spatial autoregressive models, an extra step is required to obtain \(\hat{\boldsymbol{\Sigma}}^{-1}_o\), \(\hat{\boldsymbol{\Sigma}}_u\), and \(\hat{\boldsymbol{\Sigma}}_{uo}\) as they depend on one another through the neighborhood structure of \(\mathbf{y}_o\) and \(\mathbf{y}_u\). Recall that for autoregressive models, it is \(\boldsymbol{\Sigma}^{-1}\) that is straightforward to obtain, not \(\boldsymbol{\Sigma}\).

Let \(\boldsymbol{\Sigma}^{-1}\) be the inverse covariance matrix of the observed and unobserved data, \(\mathbf{y}_o\) and \(\mathbf{y}_u\). One approach to obtain \(\boldsymbol{\Sigma}_o\) and \(\boldsymbol{\Sigma}_{uo}\) is to directly invert \(\boldsymbol{\Sigma}^{-1}\) and then subset \(\boldsymbol{\Sigma}\) appropriately. This inversion can be prohibitive when \(n_o + n_u\) is large. A faster way to obtain the quantities required for prediction exists. Represent \(\boldsymbol{\Sigma}^{-1}\) blockwise as \[\begin{equation*}\label{eq:auto_hw} \boldsymbol{\Sigma}^{-1} = \begin{bmatrix} \tilde{\boldsymbol{\Sigma}}_{o} & \tilde{\boldsymbol{\Sigma}}^{\top}_{uo} \\ \tilde{\boldsymbol{\Sigma}}_{uo} & \tilde{\boldsymbol{\Sigma}}_{u} \end{bmatrix}, \end{equation*}\] where the dimensions of the blocks match the relevant dimensions of \(\boldsymbol{\Sigma}\). All of the terms required for prediction can be obtained from this block representation. Wolf (1978) shows that \[\begin{equation*}\label{eq:hw_forms} \begin{split} \boldsymbol{\Sigma}^{-1}_o & = \tilde{\boldsymbol{\Sigma}}_{o} - \tilde{\boldsymbol{\Sigma}}^{ \top}_{uo} (\tilde{\boldsymbol{\Sigma}}_{u})^{-1} \tilde{\boldsymbol{\Sigma}}_{uo} \\ \boldsymbol{\Sigma}_u & = (\tilde{\boldsymbol{\Sigma}}_{u} - \tilde{\boldsymbol{\Sigma}}_{uo} (\tilde{\boldsymbol{\Sigma}}_{o})^{-1} \tilde{\boldsymbol{\Sigma}}^\top_{uo})^{-1} \\ \boldsymbol{\Sigma}_{uo} & = - \boldsymbol{\Sigma}_u \tilde{\boldsymbol{\Sigma}}_{uo} \tilde{\boldsymbol{\Sigma}}^{-1}_{o} \end{split} \end{equation*}\] Evaluating these expressions at \(\hat{\boldsymbol{\theta}}\) yields \(\hat{\boldsymbol{\Sigma}}^{-1}_o\), \(\hat{\boldsymbol{\Sigma}}_u\), and \(\hat{\boldsymbol{\Sigma}}_{uo}\).

A similar result exists for the log determinant of \(\boldsymbol{\Sigma}_o\), which is not required for prediction but is required for restricted maximum likelihood and maximum likelihood estimation.
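The three block expressions can be verified against brute-force inversion. In the base R sketch below, a generic positive definite matrix `Prec` stands in for \(\boldsymbol{\Sigma}^{-1}\); it is an assumption for illustration and is not built from an actual neighborhood structure. The log determinant line uses the standard block-determinant identity \(\ln|\boldsymbol{\Sigma}_o| = \ln|\tilde{\boldsymbol{\Sigma}}_u| - \ln|\boldsymbol{\Sigma}^{-1}|\), which may or may not be the exact form spmodel uses.

```r
set.seed(3)
n_o <- 5; n_u <- 2; n <- n_o + n_u
A <- matrix(rnorm(n * n), n)
Prec <- crossprod(A) + diag(n)   # stand-in for Sigma^{-1} (positive definite)
o <- 1:n_o
u <- (n_o + 1):n
P_o <- Prec[o, o]
P_u <- Prec[u, u]
P_uo <- Prec[u, o, drop = FALSE]

# Block expressions from the text
Sig_o_inv <- P_o - t(P_uo) %*% solve(P_u) %*% P_uo
Sig_u <- solve(P_u - P_uo %*% solve(P_o) %*% t(P_uo))
Sig_uo <- -Sig_u %*% P_uo %*% solve(P_o)

# Brute force: invert the full matrix, then subset
Sigma <- solve(Prec)
err <- max(abs(Sig_o_inv - solve(Sigma[o, o])),
           abs(Sig_u - Sigma[u, u]),
           abs(Sig_uo - Sigma[u, o]))

# Log determinant of Sigma_o without forming Sigma
logdet <- function(M) as.numeric(determinant(M, logarithm = TRUE)$modulus)
logdet_o_fast <- logdet(P_u) - logdet(Prec)
logdet_o_direct <- logdet(Sigma[o, o])
```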

Big Data

When the number of observations in the fitted model (observed data) is large, there are many locations to predict, or both, it is often necessary to implement computationally efficient big data approximations. Big data approximations are implemented in spmodel using the local argument to predict(). When the local method is "all", all of the fitted model data are used to make predictions. In this context, computational efficiency is only gained by parallelizing each prediction. The only available local method for spautor() fitted models is "all". This is because the neighborhood structure of spautor() fitted models does not permit the subsetting used by the "covariance" and "distance" methods that we discuss next.

When the local method is "covariance", \(\hat{\boldsymbol{\Sigma}}_{uo}\) is computed between the observation being predicted (\(\mathbf{y}_u\)) and the rest of the observed data. This vector is then ordered and a number of observations (specified via the size argument) having the highest covariance with \(\mathbf{y}_u\) are subset, yielding \(\check{\boldsymbol{\Sigma}}_{uo}\), which has dimension \(1 \times size\). Then similarly \(\hat{\boldsymbol{\Sigma}}_o\), \(\mathbf{y}_o\), and \(\mathbf{X}_o\) are also subset by these size observations, yielding \(\check{\boldsymbol{\Sigma}}_{o}\), \(\check{\mathbf{y}}_o\), and \(\check{\mathbf{X}}_o\), respectively. The previous prediction equations can be evaluated at \(\check{\boldsymbol{\Sigma}}_{uo}\), \(\check{\boldsymbol{\Sigma}}_{o}\), \(\check{\mathbf{y}}_o\), and \(\check{\mathbf{X}}_o\) (except for the quantity \((\mathbf{X}_o^\top \hat{\boldsymbol{\Sigma}}_o^{-1} \mathbf{X}_o)^{-1}\), which is evaluated using all the observed data) to yield predictions and standard errors. When the local method is "distance", a similar approach is used except a number of observations (specified via the size argument) closest (in terms of Euclidean distance) to \(\mathbf{y}_u\) are subset instead.

When random effects are not used, partition factors are not used, and

the spatial covariance function is monotone decreasing,

"covariance" and "distance" are equivalent.

This approach of subsetting the observed data by the set of locations

closest in covariance or proximity to \(\mathbf{y}_u\) is known as the local

neighborhood approach. As long as size is relatively small

(the default is 100), the local neighborhood approach is very

computationally efficient, mainly because \(\check{\boldsymbol{\Sigma}}_{o}^{-1}\) is

easy to compute. Additional computational efficiency is gained by

parallelizing each prediction.
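To make the neighborhood selection concrete, the base R sketch below picks the `size` observations nearest a single prediction location in two ways: by highest covariance and by smallest distance. The simulated coordinates, the exponential covariance, and its range of 0.3 are illustrative assumptions. Because that covariance is monotone decreasing in distance and no random effects or partition factors are involved, both rules select the same observations.

```r
set.seed(4)
n_o <- 200
size <- 10
coords_o <- matrix(runif(2 * n_o), ncol = 2)
s_u <- c(0.5, 0.5)   # one prediction location

# Euclidean distance from each observed location to s_u
h <- sqrt(rowSums(sweep(coords_o, 2, s_u)^2))
# Assumed covariance with the prediction location (monotone decreasing in h)
cov_uo <- exp(-h / 0.3)

idx_cov <- order(cov_uo, decreasing = TRUE)[1:size]   # "covariance" rule
idx_dist <- order(h)[1:size]                          # "distance" rule
same <- setequal(idx_cov, idx_dist)
```

Only the `size` by `size` covariance matrix of the selected observations then needs to be inverted for this prediction location.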

Block Prediction

Rather than making predictions at point-referenced locations, Block

Prediction (i.e., Block Kriging) is a technique used to predict an

average (or total) in some region (i.e., spatial domain). When

interval = "none" or interval = "prediction",

the (empirical) Block Prediction (BP) is given by \[\begin{equation}\label{eq:bp_pred}

\mathbf{\dot{y}}_B = \mathbf{X}_{B} \hat{\boldsymbol{\beta}} +

\hat{\boldsymbol{\Sigma}}_{B} \hat{\boldsymbol{\Sigma}}^{-1}_{o}

(\mathbf{y}_o - \mathbf{X}_o \hat{\boldsymbol{\beta}}).

\end{equation}\] The quantity \(\mathbf{X}_{B} = [\mathbf{x}_{1, B},

\mathbf{x}_{2, B}, ... , \mathbf{x}_{k, B}]^\top\) where \(j = 1, 2, ..., k\) indexes the columns of

\(\mathbf{X}_{B}\) and \(\mathbf{x}_{j, B} = \frac{1}{|B|}\int_B

\mathbf{x}_j d\mathbf{s}\) for the \(\mathbf{s}\) points in the region. The

total area (volume) of the region is \(|B|\) . The quantity \(\hat{\boldsymbol{\Sigma}}_{B} =

[\hat{\boldsymbol{\Sigma}}_1, \hat{\boldsymbol{\Sigma}}_2, ... ,

\hat{\boldsymbol{\Sigma}}_n]^\top\) where \(i = 1, 2, ... , n\) indexes each element in

\(\mathbf{y}_o\) and \(\hat{\boldsymbol{\Sigma}}_i = \frac{1}{|B|}\int_B

\text{Cov}(\mathbf{y}_B, \text{y}_i) d\mathbf{s}\). The quantity

\(\text{Cov}(\mathbf{y}_B,

\text{y}_i)\) represents the covariance between \(\text{y}_i\) and all other points in the

region. In practice, the Block Prediction integrals are approximated

using summation on a fine grid of \(G\)

points, similar to other numerical integration techniques. That is,

\(\mathbf{x}_{j, B} \approx

\frac{1}{|B|}\sum_{g = 1}^G \mathbf{x}_g\) and similarly for

\(\hat{\boldsymbol{\Sigma}}_i\), where

\(g\) indexes the points on the fine

grid. Intuitively, these summations approximate average values in the

entire region.

When interval = "prediction", the (100 \(\times\) level)% prediction

interval for \(\mathbf{\dot{y}}_B\) is

\(\mathbf{\dot{y}}_B \pm z^*

\sqrt{\sigma^2_B}\), where \(\sigma^2_B

= \sigma^{2*}_B - \hat{\boldsymbol{\Sigma}}_{B}

\hat{\boldsymbol{\Sigma}}^{-1}_{o}\hat{\boldsymbol{\Sigma}}_{B}^\top +

\mathbf{Q}_B (\mathbf{X}_o^\top \hat{\boldsymbol{\Sigma}}_o^{-1}

\mathbf{X}_o)^{-1} \mathbf{Q}_B^\top\). The quantity \(\sigma^{2*}_B = \frac{1}{|B|^2}\int_B \int_B

\text{Cov}(\text{y}_\mathbf{s}, \text{y}_\mathbf{u})

d\mathbf{s}d\mathbf{u}\), where \(\mathbf{s}\) and \(\mathbf{u}\) represent points in the region

(the product of \(\mathbf{s}\) and

\(\mathbf{u}\) contains all possible

pairs of points in the region). Intuitively, \(\sigma^{2*}_B\) approximates the average

covariance between any two points in the region through summation over

the fine grid. That is, \(\sigma^{2*}_B

\approx \frac{1}{|B|^2}\sum_{g_i = 1}^G \sum_{g_j = 1}^G

\text{Cov}(\text{y}_{g_i}, \text{y}_{g_j})\). The quantity \(\mathbf{Q}_B = \mathbf{X}_B -

\hat{\boldsymbol{\Sigma}}_B \hat{\boldsymbol{\Sigma}}^{-1}_o

\mathbf{X}_o\).

When interval = "confidence", the average process mean

(i.e., not the realized mean) and uncertainties are returned from the

underlying model. The (process) mean estimate is \(\mathbf{X}_{B} \hat{\boldsymbol{\beta}}\)

and a (100 \(\times\)

level)% confidence interval is \(\mathbf{X}_{B} \hat{\boldsymbol{\beta}} \pm z^*

\sqrt{\mathbf{X}_{B} (\mathbf{X}^\top_o \hat{\boldsymbol{\Sigma}}_o^{-1}

\mathbf{X}_o)^{-1} \mathbf{X}_{B}^\top}\).

For Big Data, when local = TRUE, the same approach is

applied as for point prediction but adjusted slightly to accommodate the

averaging necessary for Block Prediction. Thus, when

method = "covariance" (the default), the size

observations of \(\mathbf{y}_o\) having

the highest average covariance with elements of the fine grid are used

to find the subsets \(\check{\mathbf{X}}_o\), \(\check{\boldsymbol{\Sigma}}_o\), and \(\check{\mathbf{y}}_o\). When

method = "distance", the size observations of

\(\mathbf{y}_o\) having the smallest

average distance to elements of the fine grid are used to find the

subsets \(\check{\mathbf{X}}_o\), \(\check{\boldsymbol{\Sigma}}_o\), and \(\check{\mathbf{y}}_o\). Recall that these

two methods are equivalent for processes without anisotropy, random

effects, or partition factors, but can differ otherwise. The default is

size = 1000, which is much larger than for point

prediction. This is because for Block Prediction, the Cholesky

decomposition of \(\check{\boldsymbol{\Sigma}}_o\) needs to

only be computed once (rather than separately for each \(\check{\boldsymbol{\Sigma}}_o\) associated

with each prediction location, as for point prediction).

Currently, the fine grid used to obtain Block Predictions is supplied

by the user via newdata. For an overview of Block

Prediction, see Cressie (1993). For

applications to a finite population (i.e., a region with a finite number

of point locations), see Ver Hoef (2008)

and Dumelle et al. (2022).

splmRF() and spautorRF()

Random forest spatial residual model predictions are obtained by combining random forest predictions and spatial linear model predictions (i.e., Kriging) of the random forest residuals. Formally, the random forest spatial residual model predictions of \(\mathbf{y}_u\) are given by \[\begin{equation*} \mathbf{\dot{y}}_u = \mathbf{\dot{y}}_{u, rf} + \mathbf{\dot{e}}_{u, slm}, \end{equation*}\] where \(\mathbf{\dot{y}}_{u, rf}\) are the random forest predictions for \(\mathbf{y}_u\) and \(\mathbf{\dot{e}}_{u, slm}\) are the spatial linear model predictions of the random forest residuals for \(\mathbf{y}_u\). This process of obtaining predictions is sometimes analogously called random forest regression Kriging (Fox, Ver Hoef, and Olsen 2020).

Uncertainty quantification in a random forest context has been

studied (Meinshausen and Ridgeway 2006)

but is not currently available in spmodel. Big data are

accommodated by supplying the local argument to

predict().

pseudoR2()

The pseudo R-squared is a generalization of the classical R-squared from non-spatial linear models. Like the classical R-squared, the pseudo R-squared measures the proportion of variability in the response explained by the fixed effects in the fitted model. Unlike the classical R-squared, the pseudo R-squared can be applied to models whose errors do not satisfy the iid and constant variance assumption. The pseudo R-squared is given by \[\begin{equation*} PR2 = 1 - \frac{\mathcal{D}(\boldsymbol{\hat{\Theta}})}{\mathcal{D}(\boldsymbol{\hat{\Theta}}_0)}. \end{equation*}\] For normal (Gaussian) random errors, the pseudo R-squared is \[\begin{equation*} PR2 = 1 - \frac{(\mathbf{y} - \mathbf{X} \hat{\boldsymbol{\beta}})^\top \hat{\boldsymbol{\Sigma}}^{-1}(\mathbf{y} - \mathbf{X} \hat{\boldsymbol{\beta}})}{(\mathbf{y} - \hat{\mu})^\top \hat{\boldsymbol{\Sigma}}^{-1}(\mathbf{y} - \hat{\mu})}, \end{equation*}\] where \(\hat{\mu} = (\boldsymbol{1}^\top \hat{\boldsymbol{\Sigma}}^{-1} \boldsymbol{1})^{-1} \boldsymbol{1}^\top \hat{\boldsymbol{\Sigma}}^{-1} \mathbf{y}\). For the non-spatial model, the pseudo R-squared reduces to the classical R-squared, as \[\begin{equation*} PR2 = 1 - \frac{(\mathbf{y} - \mathbf{X} \hat{\boldsymbol{\beta}})^\top \hat{\boldsymbol{\Sigma}}^{-1}(\mathbf{y} - \mathbf{X} \hat{\boldsymbol{\beta}})}{(\mathbf{y} - \hat{\mu})^\top \hat{\boldsymbol{\Sigma}}^{-1}(\mathbf{y} - \hat{\mu})} = 1 - \frac{(\mathbf{y} - \mathbf{X} \hat{\boldsymbol{\beta}})^\top (\mathbf{y} - \mathbf{X} \hat{\boldsymbol{\beta}})}{(\mathbf{y} - \hat{\mu})^\top (\mathbf{y} - \hat{\mu})} = 1 - \frac{\text{SSE}}{\text{SST}} = R2, \end{equation*}\] where SSE denotes the error sum of squares and SST denotes the total sum of squares. The result follows because for a non-spatial model, \(\boldsymbol{\Sigma}\) is proportional to the identity matrix.

The adjusted pseudo r-squared adjusts for additional explanatory variables and is given by \[\begin{equation*} PR2adj = 1 - (1 - PR2)\frac{n - 1}{n - p}. \end{equation*}\] If the fitted model does not have an intercept, the \(n - 1\) term is instead \(n\).
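The reduction to the classical R-squared can be confirmed numerically. This base R sketch simulates a simple regression, sets \(\hat{\boldsymbol{\Sigma}}\) proportional to the identity (the constant 1.7 is arbitrary), evaluates the Gaussian pseudo R-squared formula, and compares it with the values reported by lm(). All data and object names are illustrative.

```r
set.seed(5)
n <- 30
x <- rnorm(n)
y <- 1 + 2 * x + rnorm(n)
X <- cbind(1, x)
p <- ncol(X)
Sigma_inv <- diag(1 / 1.7, n)   # non-spatial: Sigma proportional to identity

beta_hat <- solve(t(X) %*% Sigma_inv %*% X, t(X) %*% Sigma_inv %*% y)
# mu_hat = (1' Sigma^{-1} 1)^{-1} 1' Sigma^{-1} y
mu_hat <- sum(Sigma_inv %*% y) / sum(Sigma_inv)

r_full <- y - X %*% beta_hat
r_null <- y - mu_hat
PR2 <- 1 - as.numeric(t(r_full) %*% Sigma_inv %*% r_full) /
  as.numeric(t(r_null) %*% Sigma_inv %*% r_null)
PR2adj <- 1 - (1 - PR2) * (n - 1) / (n - p)

fit <- summary(lm(y ~ x))
R2 <- fit$r.squared
R2adj <- fit$adj.r.squared
```

The proportionality constant cancels between numerator and denominator, which is why any multiple of the identity gives the same answer.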

residuals()

Terminology regarding residual names is often conflicting and

confusing. Because of this, we explicitly define the residual options we

use in spmodel. These definitions may be different from

others you have seen in the literature.

When type = "response", response residuals are returned:

\[\begin{equation*}

\mathbf{e}_{r} = \mathbf{y} - \mathbf{X} \hat{\boldsymbol{\beta}}.

\end{equation*}\]

When type = "pearson", Pearson residuals are returned:

\[\begin{equation*}

\mathbf{e}_{p} = \hat{\boldsymbol{\Sigma}}^{-1/2}\mathbf{e}_{r}.

\end{equation*}\] If the errors are normal (Gaussian), the

Pearson residuals should be approximately normally distributed with mean

zero and variance one. The result follows when \(\hat{\boldsymbol{\Sigma}}^{-1/2} \approx

\boldsymbol{\Sigma}^{-1/2}\) because \[\begin{equation*}

\text{E}(\boldsymbol{\Sigma}^{-1/2} \mathbf{e}_{r}) =

\boldsymbol{\Sigma}^{-1/2} \text{E}(\mathbf{e}_{r}) =

\boldsymbol{\Sigma}^{-1/2} \boldsymbol{0} = \boldsymbol{0}

\end{equation*}\] and \[\begin{equation*}

\begin{split}

\text{Cov}(\boldsymbol{\Sigma}^{-1/2} \mathbf{e}_{r}) & =

\boldsymbol{\Sigma}^{-1/2} \text{Cov}(\mathbf{e}_{r})

\boldsymbol{\Sigma}^{-1/2} \\

& \approx \boldsymbol{\Sigma}^{-1/2} \boldsymbol{\Sigma}

\boldsymbol{\Sigma}^{-1/2} \\

& = (\boldsymbol{\Sigma}^{-1/2}

\boldsymbol{\Sigma}^{1/2})(\boldsymbol{\Sigma}^{1/2}

\boldsymbol{\Sigma}^{-1/2}) \\

& = \mathbf{I}

\end{split}

\end{equation*}\]

When type = "standardized", standardized residuals are

returned: \[\begin{equation*}

\mathbf{e}_{s} = \mathbf{e}_{p} \odot \frac{1}{\sqrt{1 -

diag(\mathbf{H}^*)}},

\end{equation*}\] where \(diag(\mathbf{H}^*)\) is the diagonal of the spatial hat matrix, \(\mathbf{H}^* \equiv \mathbf{X}^* (\mathbf{X}^{* \top} \mathbf{X}^*)^{-1} \mathbf{X}^{* \top}\), and \(\odot\) denotes the Hadamard (element-wise) product. This residual transformation

“standardizes” the Pearson residuals. As such, the standardized

residuals should also have mean zero and variance \[\begin{equation*}

\begin{split}

\text{Cov}(\mathbf{e}_{s}) & = \text{Cov}((\mathbf{I} -

\mathbf{H}^*) \hat{\boldsymbol{\Sigma}}^{-1/2}\mathbf{y}) \\

& \approx \text{Cov}((\mathbf{I} - \mathbf{H}^*)

\boldsymbol{\Sigma}^{-1/2}\mathbf{y}) \\

& = (\mathbf{I} - \mathbf{H}^*) \boldsymbol{\Sigma}^{-1/2}

\text{Cov}(\mathbf{y}) \boldsymbol{\Sigma}^{-1/2}(\mathbf{I} -

\mathbf{H}^*)^\top \\

& = (\mathbf{I} - \mathbf{H}^*) \boldsymbol{\Sigma}^{-1/2}

\boldsymbol{\Sigma} \boldsymbol{\Sigma}^{-1/2}(\mathbf{I} -

\mathbf{H}^*)^\top \\

& = (\mathbf{I} - \mathbf{H}^*) \mathbf{I} (\mathbf{I} -

\mathbf{H}^*)^\top \\

& = (\mathbf{I} - \mathbf{H}^*),

\end{split}

\end{equation*}\] because \((\mathbf{I}

- \mathbf{H}^*)\) is symmetric and idempotent. Note that the

average value of \(diag(\mathbf{H}^*)\)

is \(p / n\), so \((\mathbf{I} - \mathbf{H}^*) \approx

\mathbf{I}\) for large sample sizes.
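The three residual types can be computed by hand in a few lines. The base R sketch below assumes a known covariance matrix and uses the symmetric inverse square root obtained from an eigendecomposition; spmodel may use a different square root internally (for example, one based on a Cholesky factor), so treat this only as an illustration of the formulas. It also checks two facts used in the text: whitening turns \(\boldsymbol{\Sigma}\) into the identity, and the average of \(diag(\mathbf{H}^*)\) is \(p / n\).

```r
set.seed(6)
n <- 10
coords <- matrix(runif(2 * n), ncol = 2)
# Assumed covariance: exponential with range 0.5 plus a nugget of 0.1
Sigma <- exp(-as.matrix(dist(coords)) / 0.5) + diag(0.1, n)
X <- cbind(1, coords[, 1])
p <- ncol(X)
y <- rnorm(n)   # placeholder response

# Symmetric inverse square root of Sigma
e <- eigen(Sigma, symmetric = TRUE)
Sigma_inv_half <- e$vectors %*% diag(1 / sqrt(e$values)) %*% t(e$vectors)
whiten_err <- max(abs(Sigma_inv_half %*% Sigma %*% Sigma_inv_half - diag(n)))

Sigma_inv <- solve(Sigma)
beta_hat <- solve(t(X) %*% Sigma_inv %*% X, t(X) %*% Sigma_inv %*% y)

e_r <- y - X %*% beta_hat                   # response residuals
e_p <- Sigma_inv_half %*% e_r               # Pearson residuals
X_star <- Sigma_inv_half %*% X              # whitened design matrix
H_star <- X_star %*% solve(crossprod(X_star)) %*% t(X_star)
e_s <- e_p / sqrt(1 - diag(H_star))         # standardized residuals
```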

spautor() and splm()

Next we discuss technical details for the spautor() and

splm() functions. Many of the details for the two functions

are the same, though occasional differences are noted in the following

subsection headers. Specifically, spautor() and

splm() are for different data types and use different

covariance functions. spautor() is for spatial linear

models with areal data (i.e., spatial autoregressive models) and

splm() is for spatial linear models with point-referenced

data (i.e., geostatistical models). There are also a few features

splm() has that spautor() does not:

semivariogram-based estimation, random effects, anisotropy, and big data

approximations.

spautor() Spatial Covariance Functions

For areal data, the covariance matrix depends on the specification of

a neighborhood structure among the observations. Observations with at

least one neighbor (not including itself) are called “connected”

observations. Observations with no neighbors are called “unconnected”

observations. The autoregressive spatial covariance matrix can be

defined as \[\begin{equation*}

\boldsymbol{\Sigma} =

\begin{bmatrix}

\sigma^2_{de} \mathbf{R} & \mathbf{0} \\

\mathbf{0} & \sigma^2_{\xi} \mathbf{I}

\end{bmatrix}

+ \sigma^2_{ie} \mathbf{I},

\end{equation*}\] where \(\sigma^2_{de}\) \((\geq 0)\) is the spatially dependent

(correlated) variance for the connected observations, \(\mathbf{R}\) is a matrix that describes the

spatial dependence for the connected observations, \(\sigma^2_{\xi}\) \((\geq 0)\) is the independent (not

correlated) variance for the unconnected observations, and \(\sigma^2_{ie}\) \((\geq 0)\) is the independent (not

correlated) variance for all observations. As seen, the connected and

unconnected observations are allowed different variances. The total

variance for connected observations is then \(\sigma^2_{de} + \sigma^2_{ie}\) and the

total variance for unconnected observations is \(\sigma^2_{\xi} + \sigma^2_{ie}\).

spmodel accommodates two spatial covariances: conditional

autoregressive (CAR) and simultaneous autoregressive (SAR), both of

which have their \(\mathbf{R}\) forms

provided in the following table.

| Spatial covariance type | \(\mathbf{R}\) functional form |
|---|---|
| "car" | \((\mathbf{I} - \phi\mathbf{W})^{-1}\mathbf{M}\) |
| "sar" | \([(\mathbf{I} - \phi\mathbf{W})(\mathbf{I} - \phi\mathbf{W})^\top]^{-1}\) |

For both CAR and SAR covariance functions, \(\mathbf{R}\) depends on similar quantities: \(\mathbf{I}\), an identity matrix; \(\phi\), a range parameter; and \(\mathbf{W}\), a matrix that defines the neighborhood structure. Often \(\mathbf{W}\) is symmetric but it need not be. Valid values for \(\phi\) are in \((1 / \lambda_{min}, 1 / \lambda_{max})\), where \(\lambda_{min}\) is the minimum eigenvalue of \(\mathbf{W}\) and \(\lambda_{max}\) is the maximum eigenvalue of \(\mathbf{W}\) (Ver Hoef et al. 2018). For SAR covariance functions, \(\lambda_{min}\) must be negative and \(\lambda_{max}\) must be positive. For CAR covariance functions, a matrix \(\mathbf{M}\) must be provided that satisfies the CAR symmetry condition, which enforces the symmetry of the covariance matrix. The CAR symmetry condition states \[\begin{equation*} \frac{\mathbf{W}_{ij}}{\mathbf{M}_{ii}} = \frac{\mathbf{W}_{ji}}{\mathbf{M}_{jj}} \end{equation*}\] for all \(i\) and \(j\), where \(i\) and \(j\) index rows or columns. When \(\mathbf{W}\) is symmetric, \(\mathbf{M}\) is often taken to be the identity matrix.

The default in spmodel is to row-standardize \(\mathbf{W}\) by dividing each element by

its respective row sum, which decreases variance. If row-standardization

is not used for a CAR model, the default in spmodel for

\(\mathbf{M}\) is the identity

matrix.
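A small example helps fix ideas about \(\mathbf{W}\), \(\mathbf{M}\), and the valid range for \(\phi\). The base R sketch below builds a four-unit chain (1-2-3-4), computes the interval for \(\phi\) from the eigenvalues of \(\mathbf{W}\), and forms the CAR and SAR \(\mathbf{R}\) matrices. For the row-standardized case, the choice \(\mathbf{M} = diag(1 / r_i)\), where \(r_i\) is the \(i\)th row sum, is one matrix that satisfies the CAR symmetry condition; it is used here as an assumption and is not asserted to be what spmodel chooses by default.

```r
# Binary neighborhood matrix for four areal units on a line: 1-2-3-4
W <- matrix(0, 4, 4)
W[cbind(1:3, 2:4)] <- 1
W <- W + t(W)

lambda <- eigen(W, symmetric = TRUE)$values
phi_range <- c(1 / min(lambda), 1 / max(lambda))   # valid phi lies strictly inside
phi <- 0.4
I4 <- diag(4)

# Unstandardized W with M = I
R_car <- solve(I4 - phi * W) %*% I4
R_sar <- solve((I4 - phi * W) %*% t(I4 - phi * W))

# Row-standardized W with an M that satisfies W_ij / M_ii = W_ji / M_jj
row_sums <- rowSums(W)
W_rs <- W / row_sums
M_rs <- diag(1 / row_sums)
R_car_rs <- solve(I4 - phi * W_rs) %*% M_rs
```

Even though `W_rs` is not symmetric, `R_car_rs` is, which is exactly what the symmetry condition is designed to guarantee.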

splm() Spatial Covariance Functions

For point-referenced data, the spatial covariance is given by \[\begin{equation*}

\sigma^2_{de}\mathbf{R} + \sigma^2_{ie} \mathbf{I},

\end{equation*}\] where \(\sigma^2_{de}\) \((\geq 0)\) is the spatially dependent

(correlated) variance, \(\mathbf{R}\)

is a spatial correlation matrix, \(\sigma^2_{ie}\) \((\geq 0)\) is the spatially independent

(not correlated) variance, and \(\mathbf{I}\) is an identity matrix. The

\(\mathbf{R}\) matrix always depends on

a range parameter, \(\phi\) \((> 0)\), that controls the behavior of

the covariance function with distance. For some covariance functions,

the \(\mathbf{R}\) matrix depends on an

additional parameter that we call the “extra” parameter. The following

table shows the parametric form for all \(\mathbf{R}\) matrices available in

splm(). The range parameter is denoted as \(\phi\), the distance is denoted as \(h\), the distance divided by the range

parameter (\(h / \phi\)) is denoted as

\(\eta\), \(\mathcal{I}\{\cdot\}\) is an indicator

function equal to one when the argument occurs and zero otherwise, and

the extra parameter is denoted as \(\xi\) (when relevant).

| Spatial Covariance Type | \(\mathbf{R}\) Functional Form |
|---|---|
| "exponential" | \(e^{-\eta}\) |
| "spherical" | \((1 - 1.5\eta + 0.5\eta^3)\mathcal{I}\{h \leq \phi \}\) |
| "gaussian" | \(e^{-\eta^2}\) |
| "triangular" | \((1 - \eta)\mathcal{I}\{h \leq \phi \}\) |
| "circular" | \((1 - \frac{2}{\pi}[m\sqrt{1 - m^2} + sin^{-1}\{m\}])\mathcal{I}\{h \leq \phi \}, m = min(\eta, 1)\) |
| "cubic" | \((1 - 7\eta^2 + 8.75\eta^3 - 3.5\eta^5 + 0.75 \eta^7)\mathcal{I}\{h \leq \phi \}\) |
| "pentaspherical" | \((1 - 1.875\eta + 1.250\eta^3 - 0.375\eta^5)\mathcal{I}\{h \leq \phi \}\) |
| "cosine" | \(cos(\eta)\) |
| "wave" | \(\frac{sin(\eta)}{\eta}\mathcal{I}\{h > 0 \} + \mathcal{I}\{h = 0 \}\) |
| "jbessel" | \(B_j(h\phi), B_j\) is Bessel-J |
| "gravity" | \((1 + \eta^2)^{-1/2}\) |
| "rquad" | \((1 + \eta^2)^{-1}\) |
| "magnetic" | \((1 + \eta^2)^{-3/2}\) |
| "matern" | \(\frac{2^{(1 - \xi)}}{\Gamma(\xi)} \alpha^\xi B_k(\alpha, \xi), \alpha = \sqrt{2\xi \eta}, B_k\) is Bessel-K with order \(\xi\), \(\xi \in [1/5, 5]\) |
| "cauchy" | \((1 + \eta^2)^{-\xi}\), \(\xi > 0\) |
| "pexponential" | \(exp(-h^\xi / \phi)\), \(\xi \in (0, 2]\) |
| "none" | \(0\) |
| "ie" | \(0\) |
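A few of the tabulated forms are easy to write as functions of distance. The base R sketch below codes the exponential, spherical, and Gaussian forms and then assembles \(\sigma^2_{de}\mathbf{R} + \sigma^2_{ie}\mathbf{I}\) for three locations. The function names, parameter values, and coordinates are hypothetical and chosen only for illustration.

```r
# Correlation as a function of distance h and range phi (eta = h / phi)
R_exponential <- function(h, phi) exp(-h / phi)
R_spherical <- function(h, phi) {
  eta <- h / phi
  (1 - 1.5 * eta + 0.5 * eta^3) * (h <= phi)   # zero beyond the range
}
R_gaussian <- function(h, phi) exp(-(h / phi)^2)

# Spherical correlation at h = 0, at the range, and beyond the range
sph_vals <- R_spherical(c(0, 1, 2), phi = 1)

# Covariance matrix for three locations on a line (hypothetical parameters)
sigma2_de <- 2
sigma2_ie <- 0.5
phi <- 1
coords <- cbind(c(0, 1, 3), c(0, 0, 0))
H <- as.matrix(dist(coords))
Sigma <- sigma2_de * R_exponential(H, phi) + sigma2_ie * diag(nrow(H))
```

The spherical form reaches exactly zero at \(h = \phi\), whereas the exponential and Gaussian forms only approach zero as distance grows.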

Model-fitting

Likelihood-based Estimation (estmethod = "reml" or estmethod = "ml")

Minus twice a profiled (by \(\boldsymbol{\beta}\)) Gaussian log-likelihood is given by \[\begin{equation}\label{eq:ml-lik} -2\ell_p(\boldsymbol{\theta}) = \ln{|\boldsymbol{\Sigma}|} + (\mathbf{y} - \mathbf{X} \tilde{\boldsymbol{\beta}})^\top \boldsymbol{\Sigma}^{-1} (\mathbf{y} - \mathbf{X} \tilde{\boldsymbol{\beta}}) + n \ln{2\pi}, \end{equation}\] where \(\tilde{\boldsymbol{\beta}} = (\mathbf{X}^\top \boldsymbol{\Sigma}^{-1} \mathbf{X})^{-1} \mathbf{X}^\top \boldsymbol{\Sigma}^{-1} \mathbf{y}\). Minimizing this equation yields \(\boldsymbol{\hat{\theta}}_{ml}\), the maximum likelihood estimates for \(\boldsymbol{\theta}\). Then a closed form solution exists for \(\boldsymbol{\hat{\beta}}_{ml}\), the maximum likelihood estimates for \(\boldsymbol{\beta}\): \(\boldsymbol{\hat{\beta}}_{ml} = \tilde{\boldsymbol{\beta}}_{ml}\), where \(\tilde{\boldsymbol{\beta}}_{ml}\) is \(\tilde{\boldsymbol{\beta}}\) evaluated at \(\boldsymbol{\hat{\theta}}_{ml}\). To reduce bias in the variances of \(\boldsymbol{\hat{\beta}}_{ml}\) that can occur due to the simultaneous estimation of \(\boldsymbol{\beta}\) and \(\boldsymbol{\theta}\), restricted maximum likelihood estimation (REML) (Patterson and Thompson 1971; Harville 1977; Wolfinger, Tobias, and Sall 1994) has been shown to be better than maximum likelihood estimation. Integrating \(\boldsymbol{\beta}\) out of a Gaussian likelihood yields the restricted Gaussian likelihood. Minus twice a restricted Gaussian log-likelihood is given by \[\begin{equation}\label{eq:reml-lik} -2\ell_R(\boldsymbol{\theta}) = -2\ell_p(\boldsymbol{\theta}) + \ln{|\mathbf{X}^\top \boldsymbol{\Sigma}^{-1} \mathbf{X}|} - p \ln{2\pi} , \end{equation}\] where \(p\) equals the dimension of \(\boldsymbol{\beta}\). Minimizing this equation yields \(\boldsymbol{\hat{\theta}}_{reml}\), the restricted maximum likelihood estimates for \(\boldsymbol{\theta}\). Then a closed form solution exists for \(\boldsymbol{\hat{\beta}}_{reml}\), the restricted maximum likelihood estimates for \(\boldsymbol{\beta}\): \(\boldsymbol{\hat{\beta}}_{reml} = \tilde{\boldsymbol{\beta}}_{reml}\), where \(\tilde{\boldsymbol{\beta}}_{reml}\) is \(\tilde{\boldsymbol{\beta}}\) evaluated at \(\boldsymbol{\hat{\theta}}_{reml}\).

The covariance matrix can often be written as \(\boldsymbol{\Sigma} = \sigma^2 \boldsymbol{\Sigma}^*\), where \(\sigma^2\) is the overall variance and \(\boldsymbol{\Sigma}^*\) is a covariance matrix that depends on a parameter vector \(\boldsymbol{\theta}^*\) with one less dimension than \(\boldsymbol{\theta}\). Then the overall variance, \(\sigma^2\), can be profiled out of the previous likelihood equation. This reduces the number of parameters requiring optimization by one, which can dramatically reduce estimation time. For maximum likelihood estimation, further profiling out \(\sigma^2\) yields \[\begin{equation*}\label{eq:ml-plik} -2\ell_p^*(\boldsymbol{\theta}^*) = \ln{|\boldsymbol{\Sigma}^*|} + n\ln[(\mathbf{y} - \mathbf{X} \tilde{\boldsymbol{\beta}})^\top \boldsymbol{\Sigma}^{* -1} (\mathbf{y} - \mathbf{X} \tilde{\boldsymbol{\beta}})] + n + n\ln(2\pi / n). \end{equation*}\] After finding \(\hat{\boldsymbol{\theta}}^*_{ml}\), a closed form solution for \(\hat{\sigma}^2_{ml}\) exists: \(\hat{\sigma}^2_{ml} = [(\mathbf{y} - \mathbf{X} \tilde{\boldsymbol{\beta}})^\top \boldsymbol{\Sigma}^{* -1} (\mathbf{y} - \mathbf{X} \tilde{\boldsymbol{\beta}})] / n\). Then \(\boldsymbol{\hat{\theta}}^*_{ml}\) is combined with \(\hat{\sigma}^2_{ml}\) to yield \(\boldsymbol{\hat{\theta}}_{ml}\) and subsequently \(\boldsymbol{\hat{\beta}}_{ml}\).

A similar result holds for restricted maximum likelihood estimation. Further profiling out \(\sigma^2\) yields \[\begin{equation*}\label{eq:reml-plik} -2\ell_R^*(\boldsymbol{\theta}^*) = \ln{|\boldsymbol{\Sigma}^*|} + (n - p)\ln[(\mathbf{y} - \mathbf{X} \tilde{\boldsymbol{\beta}})^\top \boldsymbol{\Sigma}^{* -1} (\mathbf{y} - \mathbf{X} \tilde{\boldsymbol{\beta}})] + \ln{|\mathbf{X}^\top \boldsymbol{\Sigma}^{* -1} \mathbf{X}|} + (n - p) + (n - p)\ln[2\pi / (n - p)]. \end{equation*}\] After finding \(\hat{\boldsymbol{\theta}}^*_{reml}\), a closed form solution for \(\hat{\sigma}^2_{reml}\) exists: \(\hat{\sigma}^2_{reml} = [(\mathbf{y} - \mathbf{X} \tilde{\boldsymbol{\beta}})^\top \boldsymbol{\Sigma}^{* -1} (\mathbf{y} - \mathbf{X} \tilde{\boldsymbol{\beta}})] / (n - p)\). Then \(\boldsymbol{\hat{\theta}}^*_{reml}\) is combined with \(\hat{\sigma}^2_{reml}\) to yield \(\boldsymbol{\hat{\theta}}_{reml}\) and subsequently \(\boldsymbol{\hat{\beta}}_{reml}\). For more on profiling Gaussian likelihoods, see Wolfinger, Tobias, and Sall (1994).
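The profiling identities can be checked numerically. The following base R sketch is not spmodel's internal code: it simulates a small data set under an assumed exponential-plus-nugget covariance (all object and function names are hypothetical), assumes the standard Gaussian forms of the full maximum likelihood and restricted maximum likelihood objectives, and confirms that plugging the closed form \(\hat{\sigma}^2\) into each full objective reproduces the corresponding profiled objective.

```r
set.seed(1)
n <- 30
coords <- cbind(runif(n), runif(n))
H <- as.matrix(dist(coords))
X <- cbind(1, coords[, 1])  # intercept plus one covariate
p <- ncol(X)

# Sigma* = (1 - nug) * exp(-H / phi) + nug * I, so that Sigma = sigma2 * Sigma*
make_sigma_star <- function(phi, nug) (1 - nug) * exp(-H / phi) + nug * diag(n)

# Simulate a response with overall variance 2
Sigma <- 2 * make_sigma_star(phi = 0.3, nug = 0.2)
y <- drop(X %*% c(1, 0.5) + t(chol(Sigma)) %*% rnorm(n))

# Pieces shared by both likelihoods, with beta-tilde computed by generalized least squares
gls_parts <- function(S) {
  S_inv <- solve(S)
  XtSiX <- t(X) %*% S_inv %*% X
  beta <- solve(XtSiX, t(X) %*% S_inv %*% y)
  r <- y - X %*% beta
  list(
    quad = drop(t(r) %*% S_inv %*% r),
    logdet = as.numeric(determinant(S, logarithm = TRUE)$modulus),
    logdet_x = as.numeric(determinant(XtSiX, logarithm = TRUE)$modulus)
  )
}

# Full -2 log-likelihoods, which depend on sigma2
m2ll_ml <- function(sigma2, phi, nug) {
  g <- gls_parts(sigma2 * make_sigma_star(phi, nug))
  g$logdet + g$quad + n * log(2 * pi)
}
m2ll_reml <- function(sigma2, phi, nug) {
  g <- gls_parts(sigma2 * make_sigma_star(phi, nug))
  g$logdet + g$quad + g$logdet_x + (n - p) * log(2 * pi)
}

# Profiled -2 log-likelihoods, in which sigma2 no longer appears
m2ll_ml_prof <- function(phi, nug) {
  g <- gls_parts(make_sigma_star(phi, nug))
  g$logdet + n * log(g$quad) + n + n * log(2 * pi / n)
}
m2ll_reml_prof <- function(phi, nug) {
  g <- gls_parts(make_sigma_star(phi, nug))
  g$logdet + (n - p) * log(g$quad) + g$logdet_x + (n - p) + (n - p) * log(2 * pi / (n - p))
}

# Evaluate at an arbitrary theta* and compare
phi <- 0.25
nug <- 0.1
quad_star <- gls_parts(make_sigma_star(phi, nug))$quad
sigma2_ml <- quad_star / n
sigma2_reml <- quad_star / (n - p)
all.equal(m2ll_ml(sigma2_ml, phi, nug), m2ll_ml_prof(phi, nug))
all.equal(m2ll_reml(sigma2_reml, phi, nug), m2ll_reml_prof(phi, nug))
```

Both comparisons should agree up to floating point error, because \(\tilde{\boldsymbol{\beta}}\) is unchanged when \(\boldsymbol{\Sigma}\) is multiplied by a constant.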

Both maximum likelihood and restricted maximum likelihood estimation rely on the \(n \times n\) covariance matrix inverse. Inverting an \(n \times n\) matrix is an enormous computational demand that scales cubically with the sample size. For this reason, maximum likelihood and restricted maximum likelihood estimation have historically been infeasible to implement in their standard form with data larger than a few thousand observations. This motivates the use of big data approaches.

Semivariogram-based Estimation (splm() only)

An alternative approach to likelihood-based estimation is semivariogram-based estimation. The semivariogram of a constant-mean process \(\mathbf{y}\) is the expectation of half of the squared difference between two observations \(h\) distance apart. More formally, the semivariogram is denoted \(\gamma(h)\) and defined as \[\begin{equation*}\label{eq:sv} \gamma(h) = \text{E}[(y_i - y_j)^2] / 2 , \end{equation*}\] where \(h\) is the Euclidean distance between the locations of \(y_i\) and \(y_j\). When the process \(\mathbf{y}\) is second-order stationary, the semivariogram and covariance function are intimately connected: \(\gamma(h) = \sigma^2 - \text{Cov}(h)\), where \(\sigma^2\) is the overall variance and \(\text{Cov}(h)\) is the covariance function evaluated at \(h\). As such, the semivariogram and covariance function rely on the same parameter vector \(\boldsymbol{\theta}\). Both of the semivariogram approaches described next are more computationally efficient than restricted maximum likelihood and maximum likelihood estimation because the major computational burden of the semivariogram approaches (calculations based on squared differences among pairs) scales quadratically with the sample size (i.e., not the cubed sample size like the likelihood-based approaches).
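As a small illustration of the relationship \(\gamma(h) = \sigma^2 - \text{Cov}(h)\), the following base R sketch assumes an exponential covariance function without a nugget, using illustrative values of \(\sigma^2\) and \(\phi\) (the function names are hypothetical). It tabulates the covariance and semivariogram at a few distances and then uses simulation to check the definition \(\gamma(h) = \text{E}[(y_i - y_j)^2] / 2\) at one distance.

```r
# Exponential covariance function (no nugget); sigma2 and phi are illustrative values
sigma2 <- 2
phi <- 0.5
cov_exp <- function(h) sigma2 * exp(-h / phi)
semivar_exp <- function(h) sigma2 - cov_exp(h)

h <- c(0, 0.25, 0.5, 1, 1.5, 3)
data.frame(h = h, covariance = cov_exp(h), semivariogram = semivar_exp(h))

# Monte Carlo check of the definition for two locations h0 distance units apart
set.seed(2)
h0 <- 0.5
Sigma2 <- matrix(c(sigma2, cov_exp(h0), cov_exp(h0), sigma2), nrow = 2)
y <- matrix(rnorm(2e5), ncol = 2) %*% chol(Sigma2)  # each row is a draw of (y_i, y_j)
mc <- mean((y[, 1] - y[, 2])^2) / 2
c(monte_carlo = mc, theory = semivar_exp(h0))
```

The semivariogram starts at zero and rises toward \(\sigma^2\) as the covariance decays toward zero.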

Weighted Least Squares (estmethod = "sv-wls")

The empirical semivariogram is a moment-based estimate of the semivariogram denoted by \(\hat{\gamma}(h)\). It is defined as \[\begin{equation*} \hat{\gamma}(h) = \frac{1}{2|N(h)|} \sum_{N(h)} (y_i - y_j)^2, \end{equation*}\] where \(N(h)\) is the set of pairs of observations in \(\mathbf{y}\) that are \(h\) distance units apart (in practice, pairs are grouped into distance classes) and \(|N(h)|\) is the cardinality of \(N(h)\) (Cressie 1993). One criticism of the empirical semivariogram is that distance bins and cutoffs tend to be arbitrarily chosen (i.e., not chosen according to some statistical criteria).
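The empirical semivariogram can be computed directly from pairwise distances and squared differences. The following base R sketch is an illustration, not the implementation behind esv(): it uses a placeholder constant-mean response, equal-width distance classes, and hypothetical object names.

```r
set.seed(3)
n <- 200
coords <- cbind(runif(n), runif(n))
y <- rnorm(n)  # placeholder constant-mean response

# All choose(n, 2) pairwise distances and squared differences (same pair ordering)
h <- as.vector(dist(coords))
sqdiff <- as.vector(dist(y))^2

# Equal-width distance classes up to half the bounding box diagonal
bins <- 15
side_lengths <- apply(coords, 2, function(v) diff(range(v)))
cutoff <- 0.5 * sqrt(sum(side_lengths^2))
keep <- h > 0 & h <= cutoff
bin_id <- cut(h[keep], breaks = seq(0, cutoff, length.out = bins + 1))

esv_hat <- data.frame(
  dist = as.numeric(tapply(h[keep], bin_id, mean)),            # average distance in each class
  gamma = as.numeric(tapply(sqdiff[keep], bin_id, mean)) / 2,  # empirical semivariogram
  np = as.numeric(table(bin_id))                               # |N(h)|, the number of pairs
)
esv_hat
```

Because the placeholder response is independent with unit variance, the empirical semivariogram should hover around one in every distance class.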

Cressie (1985) proposed estimating \(\boldsymbol{\theta}\) by minimizing an objective function that involves \(\gamma(h)\) and \(\hat{\gamma}(h)\) and is based on a weighted least squares criterion. This criterion is defined as \[\begin{equation}\label{eq:svwls} \sum_i w_i [\hat{\gamma}(h)_i - \gamma(h)_i]^2, \end{equation}\] where \(w_i\), \(\hat{\gamma}(h)_i\), and \(\gamma(h)_i\) are the weights, empirical semivariogram, and semivariogram for the \(i\)th distance class, respectively. Minimizing this loss function yields \(\boldsymbol{\hat{\theta}}_{wls}\), the semivariogram weighted least squares estimate of \(\boldsymbol{\theta}\). After estimating \(\boldsymbol{\theta}\), \(\boldsymbol{\beta}\) estimates are constructed using (empirical) generalized least squares: \(\boldsymbol{\hat{\beta}}_{wls} = (\mathbf{X}^\top \hat{\mathbf{\Sigma}}^{-1} \mathbf{X})^{-1} \mathbf{X}^\top \hat{\mathbf{\Sigma}}^{-1} \mathbf{y}\).
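The weighted least squares criterion and the subsequent generalized least squares step can be sketched in base R. This is a minimal illustration under stated assumptions rather than spmodel's implementation: the data are simulated from a mean-zero process with an exponential covariance and a nugget, the weights are \(w_i = |N(h)| / \gamma(h)_i^2\), parameters are optimized on the log scale to keep them positive, and all names (gamma_exp, wls_loss, theta_wls) are hypothetical.

```r
set.seed(4)
n <- 300
coords <- cbind(runif(n), runif(n))
H <- as.matrix(dist(coords))
Sigma <- 1.5 * exp(-H / 0.2) + 0.5 * diag(n)  # true de = 1.5, range = 0.2, ie = 0.5
y <- drop(t(chol(Sigma)) %*% rnorm(n))        # mean-zero process

# Empirical semivariogram (15 classes, cutoff at half the bounding box diagonal)
h <- as.vector(dist(coords))
sqdiff <- as.vector(dist(y))^2
cutoff <- 0.5 * sqrt(sum(apply(coords, 2, function(v) diff(range(v)))^2))
keep <- h > 0 & h <= cutoff
bin_id <- cut(h[keep], breaks = seq(0, cutoff, length.out = 16))
h_bar <- as.numeric(tapply(h[keep], bin_id, mean))
gamma_hat <- as.numeric(tapply(sqdiff[keep], bin_id, mean)) / 2
np <- as.numeric(table(bin_id))

# Exponential semivariogram with a nugget, valid for h > 0
gamma_exp <- function(h, de, ie, range) ie + de * (1 - exp(-h / range))

# Weighted least squares criterion with weights |N(h)| / gamma(h)^2
wls_loss <- function(log_par) {
  par <- exp(log_par)
  g <- gamma_exp(h_bar, par[1], par[2], par[3])
  sum(np / g^2 * (gamma_hat - g)^2)
}

start <- log(c(1, 1, 0.1))
fit <- optim(start, wls_loss, method = "Nelder-Mead")
theta_wls <- setNames(exp(fit$par), c("de", "ie", "range"))
theta_wls

# (Empirical) generalized least squares for beta, here with an intercept only
X <- matrix(1, nrow = n, ncol = 1)
Sigma_hat <- theta_wls[["de"]] * exp(-H / theta_wls[["range"]]) + theta_wls[["ie"]] * diag(n)
Sigma_hat_inv <- solve(Sigma_hat)
beta_wls <- solve(t(X) %*% Sigma_hat_inv %*% X, t(X) %*% Sigma_hat_inv %*% y)
beta_wls
```

Only the binned summaries enter the loss, so each evaluation of wls_loss() involves 15 terms regardless of the sample size.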

Cressie (1985) recommends setting \(w_i = |N(h)| / \gamma(h)_i^2\), which gives more weight to distance classes with more observations (\(|N(h)|\)) and shorter distances (\(1 / \gamma(h)_i^2\)). The default in spmodel is to use these \(w_i\), known as Cressie weights, though several other options for \(w_i\) exist and are available via the weights argument. The following table contains all \(w_i\) available via the weights argument.

| \(w_i\) Name | \(w_i\) Form | weights = |
|---|---|---|
| Cressie | \(\lvert N(h) \rvert / \gamma(h)_i^2\) | "cressie" |
| Cressie (Denominator) Root | \(\lvert N(h) \rvert / \gamma(h)_i\) | "cressie-dr" |
| Cressie No Pairs | \(1 / \gamma(h)_i^2\) | "cressie-nopairs" |
| Cressie (Denominator) Root No Pairs | \(1 / \gamma(h)_i\) | "cressie-dr-nopairs" |
| Pairs | \(\lvert N(h) \rvert\) | "pairs" |
| Pairs Inverse Distance | \(\lvert N(h) \rvert / h^2\) | "pairs-invd" |
| Pairs Inverse (Root) Distance | \(\lvert N(h) \rvert / h\) | "pairs-invrd" |
| Ordinary Least Squares | 1 | "ols" |
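The forms in the table translate directly into code. The helper below is a hypothetical illustration (sv_weights() is not an spmodel function) that maps each weights name to its \(w_i\), given the number of pairs, the semivariogram value, and the distance for each distance class.

```r
# Hypothetical helper: weights for each distance class, following the table above
# np = |N(h)|, gamma = semivariogram value, h = distance for the class
sv_weights <- function(weights, np, gamma, h) {
  switch(weights,
    "cressie" = np / gamma^2,
    "cressie-dr" = np / gamma,
    "cressie-nopairs" = 1 / gamma^2,
    "cressie-dr-nopairs" = 1 / gamma,
    "pairs" = np,
    "pairs-invd" = np / h^2,
    "pairs-invrd" = np / h,
    "ols" = rep(1, length(np)),
    stop("Unknown weights: ", weights)
  )
}

# Three illustrative distance classes
np <- c(100, 400, 900)
gamma <- c(0.5, 1, 2)
h <- c(0.1, 0.2, 0.4)
sv_weights("cressie", np, gamma, h)
sv_weights("pairs-invd", np, gamma, h)
sv_weights("ols", np, gamma, h)
```

In this toy example, the Cressie weights are largest for the middle class and smallest for the class with the largest semivariogram value, even though that class has the most pairs.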

The number of \(N(h)\) classes and the maximum distance for \(h\) are specified by passing the bins and cutoff arguments to splm() (these arguments are passed via ... to esv()). The default value for bins is 15 and the default value for cutoff is half the maximum distance of the spatial domain’s bounding box.
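A call that overrides these defaults might look like the following sketch. The data are simulated and the column names (xc, yc, x, y) and the values chosen for weights, bins, and cutoff are purely illustrative; the model is only fit if spmodel is installed.

```r
set.seed(6)
n <- 100
dat <- data.frame(xc = runif(n), yc = runif(n), x = rnorm(n))
H <- as.matrix(dist(dat[, c("xc", "yc")]))
Sigma <- 1.5 * exp(-H / 0.2) + 0.5 * diag(n)
dat$y <- drop(1 + 0.5 * dat$x + t(chol(Sigma)) %*% rnorm(n))

if (requireNamespace("spmodel", quietly = TRUE)) {
  fit <- spmodel::splm(
    y ~ x,
    data = dat,
    spcov_type = "exponential",
    xcoord = xc,
    ycoord = yc,
    estmethod = "sv-wls",
    weights = "cressie-dr",  # one of the options in the table above
    bins = 20,               # number of distance classes
    cutoff = 0.5             # maximum distance used in the empirical semivariogram
  )
  print(summary(fit))
}
```

Changing bins or cutoff changes the empirical semivariogram itself, so the resulting estimates can be sensitive to these choices.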

Recall that the semivariogram is defined for a constant-mean process. In general, \(\mathbf{y}\) does not have a constant mean, so the empirical semivariogram and \(\boldsymbol{\hat{\theta}}_{wls}\) are typically constructed using the residuals from an ordinary least squares regression of \(\mathbf{y}\) on \(\mathbf{X}\). These ordinary least squares residuals are assumed to have mean zero.

Composite Likelihood (estmethod = "sv-cl")

Composite likelihood approaches involve constructing likelihoods based on conditional or marginal events for which likelihoods are available and then adding together these individual components. Composite likelihoods are attractive because they behave very similarly to likelihoods but are easier to handle, both theoretically and computationally. Curriero and Lele (1999) derive a particular composite likelihood for estimating semivariogram parameters. The negative log of this composite likelihood, denoted \(\text{CL}(h)\), is given by \[\begin{equation}\label{eq:svcl} \text{CL}(h) = \sum_{i = 1}^{n - 1} \sum_{j > i} \left( \frac{(y_i - y_j)^2}{2\gamma(h)} + \ln(\gamma(h)) \right), \end{equation}\] where \(\gamma(h)\) is the semivariogram evaluated at the distance \(h\) between the locations of \(y_i\) and \(y_j\). Minimizing this loss function yields \(\boldsymbol{\hat{\theta}}_{cl}\), the semivariogram composite likelihood estimates of \(\boldsymbol{\theta}\). After estimating \(\boldsymbol{\theta}\), \(\boldsymbol{\beta}\) estimates are constructed using (empirical) generalized least squares: \(\boldsymbol{\hat{\beta}}_{cl} = (\mathbf{X}^\top \hat{\mathbf{\Sigma}}^{-1} \mathbf{X})^{-1} \mathbf{X}^\top \hat{\mathbf{\Sigma}}^{-1} \mathbf{y}\).
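The composite likelihood loss can be written in a few lines of base R. As before, this is a sketch under stated assumptions and not spmodel's implementation: the data are simulated from a mean-zero process, the semivariogram is assumed to be exponential with a nugget, parameters are optimized on the log scale, and the names gamma_exp, cl_loss, and theta_cl are hypothetical.

```r
set.seed(5)
n <- 150
coords <- cbind(runif(n), runif(n))
H <- as.matrix(dist(coords))
Sigma <- 1.5 * exp(-H / 0.2) + 0.5 * diag(n)  # true de = 1.5, range = 0.2, ie = 0.5
y <- drop(t(chol(Sigma)) %*% rnorm(n))        # mean-zero process

# All choose(n, 2) pairs enter the loss; no bins or cutoff are needed
h <- as.vector(dist(coords))
sqdiff <- as.vector(dist(y))^2

# Exponential semivariogram with a nugget, valid for h > 0
gamma_exp <- function(h, de, ie, range) ie + de * (1 - exp(-h / range))

# Negative log composite likelihood, summed over all pairs
cl_loss <- function(log_par) {
  par <- exp(log_par)
  g <- gamma_exp(h, par[1], par[2], par[3])
  sum(sqdiff / (2 * g) + log(g))
}

start <- log(c(1, 1, 0.1))
fit <- optim(start, cl_loss, method = "Nelder-Mead")
theta_cl <- setNames(exp(fit$par), c("de", "ie", "range"))
theta_cl
```

Each evaluation of cl_loss() touches every pair, which is the source of the additional computational cost relative to a loss built from binned summaries.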

An advantage of the composite likelihood approach to semivariogram estimation is that it does not require arbitrarily specifying empirical semivariogram bins and cutoffs. It does tend to be more computationally demanding than weighted least squares, however. The composite likelihood is constructed from all \(\binom{n}{2}\) pairs for a sample size \(n\), whereas the weighted least squares approach only uses the \(|N(h)|\) pairs in each distance class \(N(h)\) (pairs farther apart than the cutoff are excluded) and summarizes them into a small number of empirical semivariogram values. As with the weighted least squares approach, the composite likelihood approach requires a constant-mean process, so typically the residuals from an ordinary least squares regression of \(\mathbf{y}\) on \(\mathbf{X}\) are used to estimate \(\boldsymbol{\theta}\).

Optimization

Parameter estimation is performed using stats::optim(). The default estimation method is Nelder-Mead (Nelder and Mead 1965) and the stopping criterion is a relative convergence tolerance (reltol) of 0.0001. If only one parameter requires estimation (on the profiled scale if relevant), the Brent algorithm is instead used (Brent 1971). Arguments to optim() are passed via ... to splm() and spautor(). For example, the default estimation method and convergence criteria are overridden by passing method and control, respectively, to splm() and spautor(). If the lower and upper arguments to optim() are specified in splm() and spautor() to be passed to optim(), they are ignored, as optimization for all parameters is generally unconstrained. Initial values for optim() are found using the grid search described next.
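The optim() settings described above can be tried on a toy problem. In this sketch the objective functions are simple quadratics that stand in for a likelihood or semivariogram loss (they are not spmodel objectives), and the bounds given to the Brent method are illustrative.

```r
# Toy objective with a known minimum at (1, 2)
obj <- function(par) (par[1] - 1)^2 + (par[2] - 2)^2

# Nelder-Mead with a relative convergence tolerance of 0.0001
fit_nm <- optim(c(0, 0), obj, method = "Nelder-Mead", control = list(reltol = 1e-4))
fit_nm$par

# A tighter tolerance typically costs more function evaluations
fit_tight <- optim(c(0, 0), obj, method = "Nelder-Mead", control = list(reltol = 1e-10))
c(default = fit_nm$counts[["function"]], tight = fit_tight$counts[["function"]])

# One parameter: the Brent method in optim() requires finite lower and upper bounds
obj1 <- function(x) (x - 1)^2
fit_brent <- optim(0, obj1, method = "Brent", lower = -10, upper = 10)
fit_brent$par
```

When fitting a model, the same method and control arguments would be supplied to splm() or spautor() rather than to optim() directly.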

Grid Search

spmodel uses a grid search to find suitable initial

values for use in optimization. For spatial linear models without random

effects, the spatially dependent variance (\(\sigma^2_{de}\)) and spatially independent

variance (\(\sigma^2_{ie}\)) parameters

are given “low”, “medium”, and “high” values. The sample variance of a

non-spatial linear model is slightly inflated by a factor of 1.2

(non-spatial models can underestimate the variance when there is spatial

dependence) and these “low”, “medium”, and “high” values correspond to

10%, 50%, and 90% of the inflated sample variance. Only combinations of

\(\sigma^2_{de}\) and \(\sigma^2_{ie}\) whose proportions sum to

100% are considered. The range (\(\phi\)) and extra (\(\xi\)) parameters are given “low” and

“high” values that are unique to each spatial covariance function. For

example, when using an exponential covariance function, the “low” value

of \(\phi\) is one-half the diagonal of

the domain’s bounding box divided by three. This particular value is

chosen so that the effective range (the distance at which the covariance

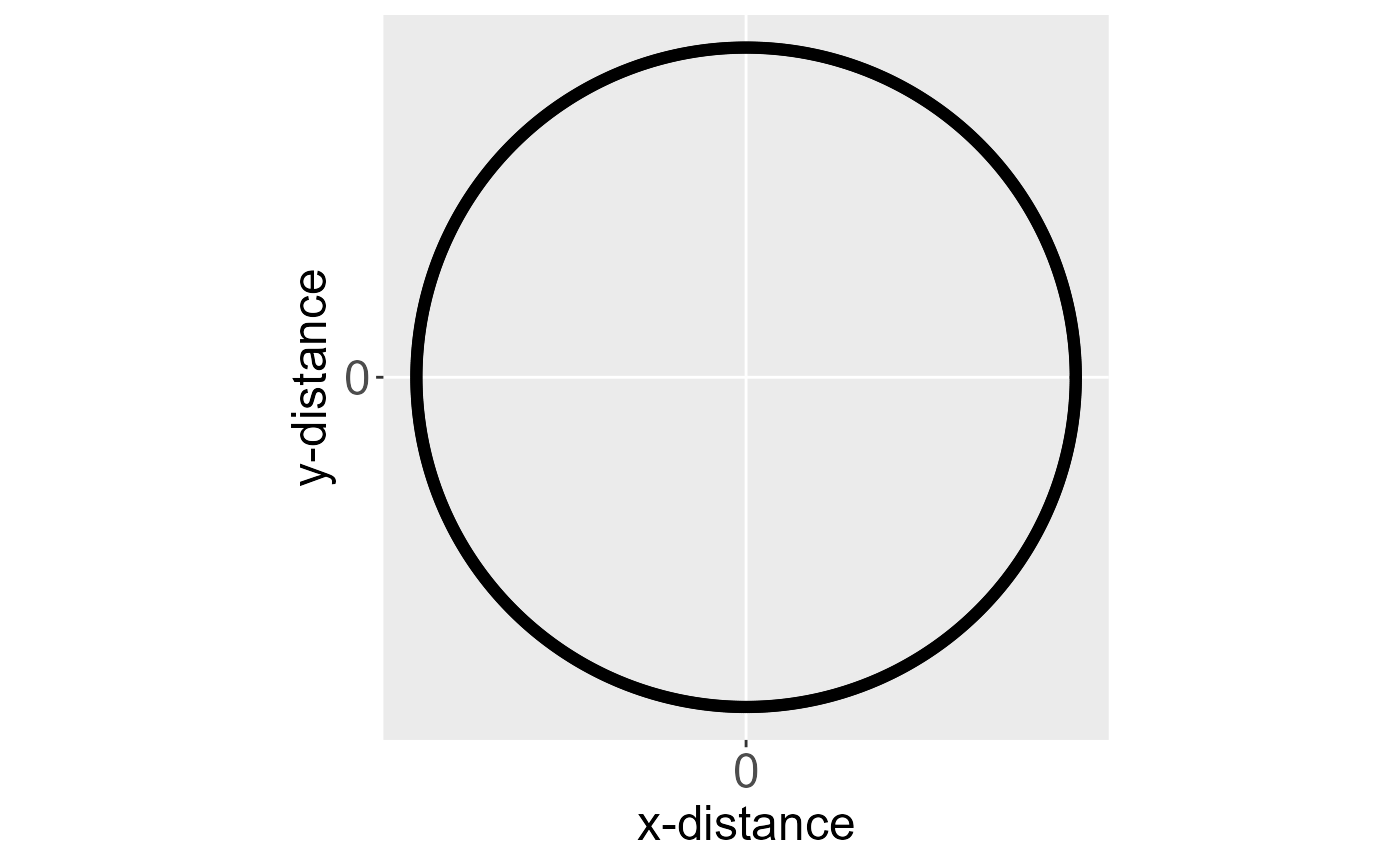

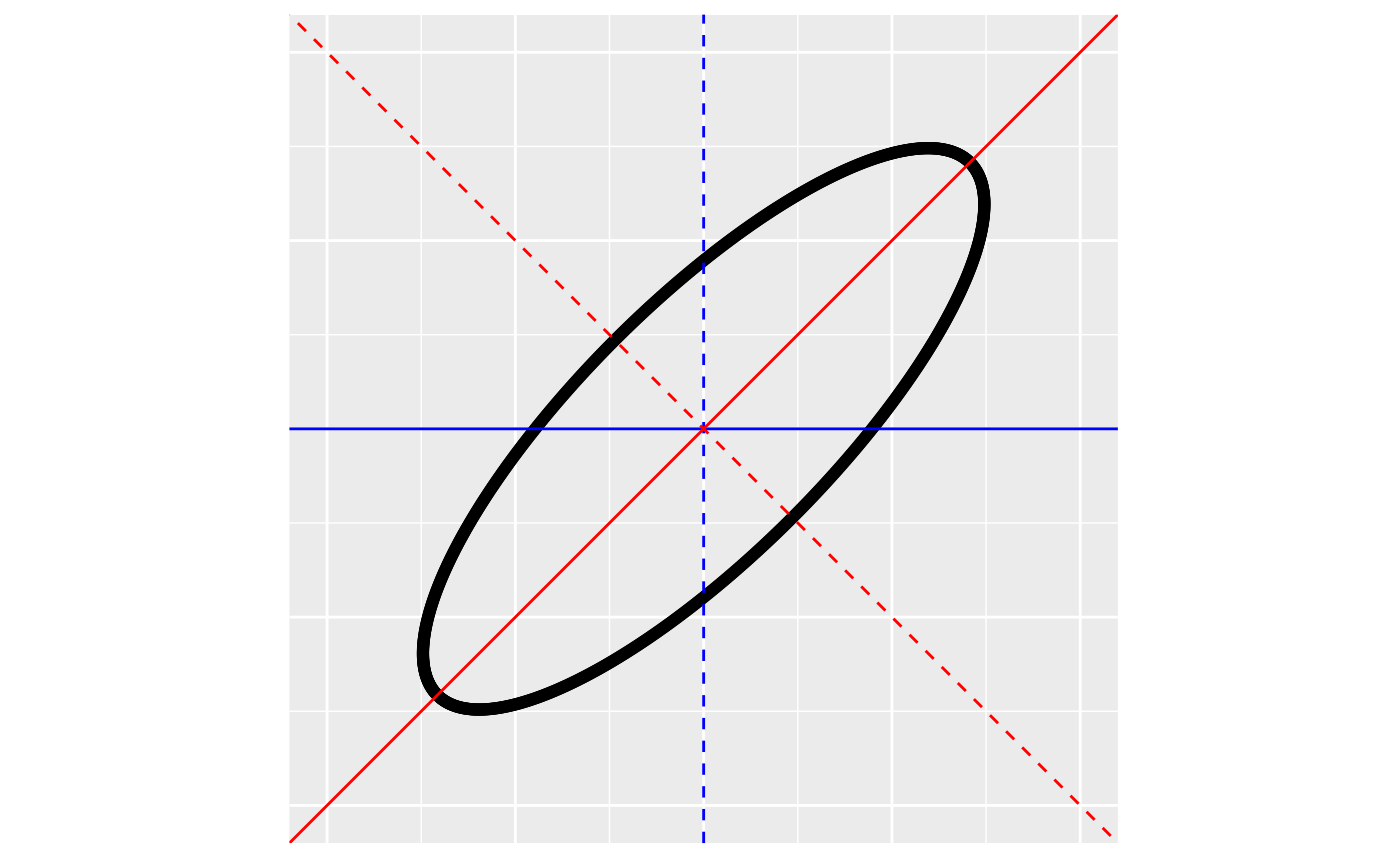

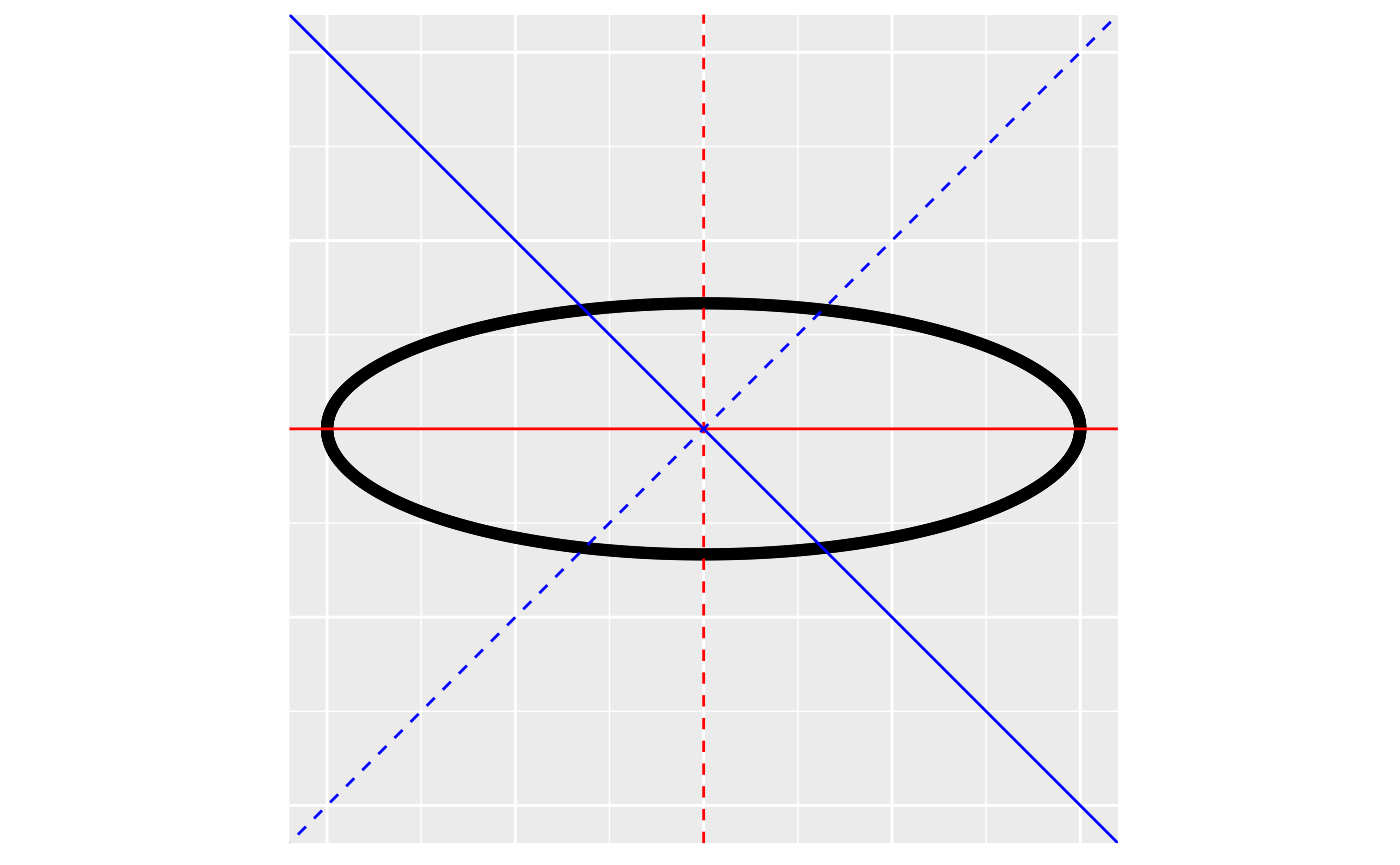

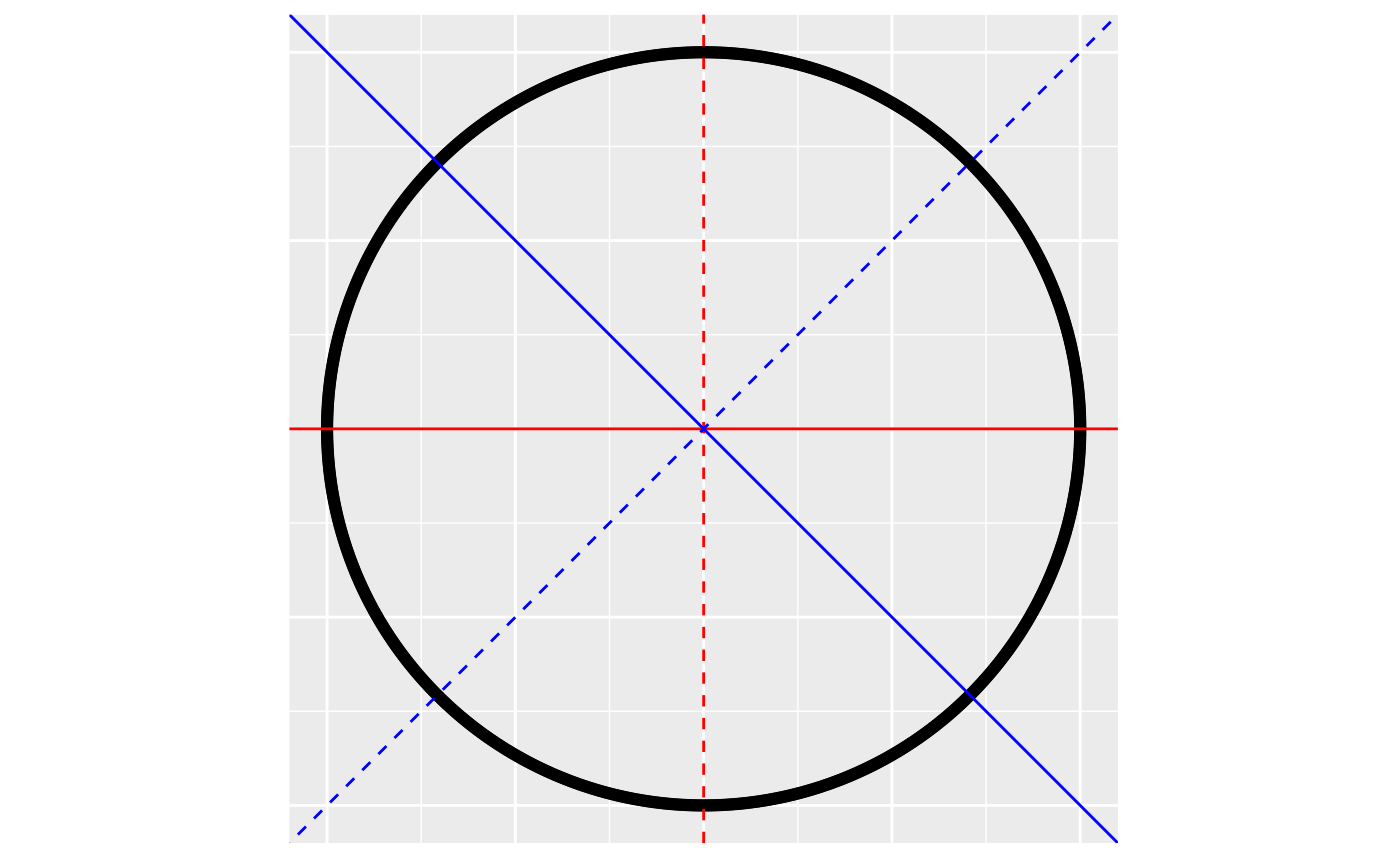

is approximately zero), which equals \(3\phi\) for the exponential covariance